Software/Datasets

GG-SSMs: Graph-Generating State Space Models

State Space Models (SSMs) are powerful tools for modeling sequential data in computer vision and time series analysis domains. However, traditional SSMs are limited by fixed, one-dimensional sequential processing, which restricts their ability to model non-local interactions in high-dimensional data. While methods like Mamba and VMamba introduce selective and flexible scanning strategies, they rely on predetermined paths and thus fail to capture complex dependencies efficiently. We introduce Graph-Generating State Space Models (GG-SSMs), a novel framework that overcomes these limitations by dynamically constructing graphs based on feature relationships. Using Chazelle's Minimum Spanning Tree algorithm, GG-SSMs adapt to the inherent data structure, enabling robust feature propagation across dynamically generated graphs and efficiently modeling complex dependencies. We validate GG-SSMs on 11 diverse datasets, including event-based eye-tracking, ImageNet classification, optical flow estimation, and six time series datasets. GG-SSMs achieve state-of-the-art performance across all tasks, surpassing existing methods by significant margins. Specifically, GG-SSM attains a top-1 accuracy of 84.9% on ImageNet, outperforming prior SSMs by 1%, reduces the KITTI-15 error rate to 2.77%, and improves eye-tracking detection rates by up to 0.33% with fewer parameters. These results demonstrate that dynamic scanning based on feature relationships significantly improves SSMs' representational power and efficiency, offering a versatile tool for various applications in computer vision and beyond.
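
As a rough illustration of building a graph from feature relationships, the sketch below computes a minimum spanning tree over a small set of feature vectors. It is only a sketch under stated assumptions: it uses Prim's algorithm rather than Chazelle's algorithm named in the abstract, it takes Euclidean distance as the edge weight, and the function name `feature_mst` is hypothetical rather than part of the GG-SSM code.

```python
import math


def feature_mst(features):
    """Return MST edges (parent, child, weight) over a complete feature graph.

    Assumption: edge weight is the Euclidean distance between two features.
    This is Prim's algorithm in O(n^2), not Chazelle's algorithm.
    """
    n = len(features)
    if n == 0:
        return []
    in_tree = [False] * n
    best_cost = [math.inf] * n  # cheapest known edge linking each node to the tree
    best_parent = [-1] * n
    best_cost[0] = 0.0
    edges = []
    for _ in range(n):
        # Pick the cheapest node that is not yet part of the tree.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best_cost[i])
        in_tree[u] = True
        if best_parent[u] >= 0:
            edges.append((best_parent[u], u, best_cost[u]))
        # Relax the connection cost of every remaining node through u.
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(features[u], features[v])
                if d < best_cost[v]:
                    best_cost[v] = d
                    best_parent[v] = u
    return edges


edges = feature_mst([(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)])
```

The resulting tree links each feature to a nearby neighbor in feature space, so a state could be propagated along tree edges instead of along a fixed scan order.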

Student-Informed Teacher Training

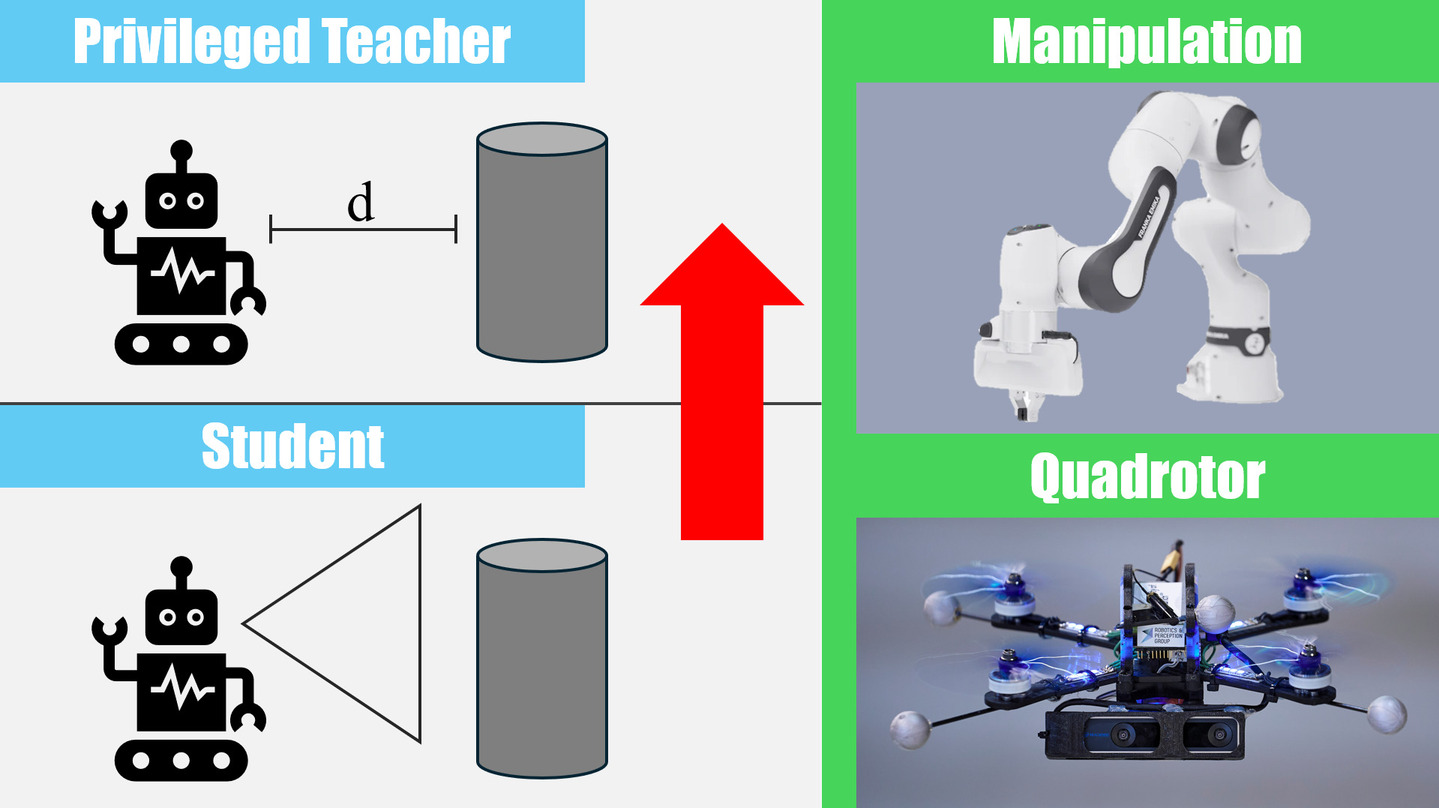

Imitation learning with a privileged teacher has proven effective for learning complex control behaviors from high-dimensional inputs, such as images. In this framework, a teacher is trained with privileged task information, while a student tries to predict the actions of the teacher from more limited observations. For example, in a robot navigation task, the teacher might have access to distances to nearby obstacles, while the student only receives visual observations of the scene. However, privileged imitation learning faces a key challenge: the student might be unable to imitate the teacher's behavior due to partial observability. This problem arises because the teacher is trained without considering whether the student is capable of imitating the learned behavior. To address this teacher-student asymmetry, we propose a framework for joint training of the teacher and student policies, encouraging the teacher to learn behaviors that can be imitated by the student despite the latter's limited access to information and its partial observability. Based on the performance bound in imitation learning, we add (i) the approximated action difference between teacher and student as a penalty term to the reward function of the teacher, and (ii) a supervised teacher-student alignment step. We motivate our method with a maze navigation task and demonstrate its effectiveness on complex vision-based quadrotor flight and manipulation tasks.
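
The penalty term in (i) can be pictured with a minimal sketch. The Euclidean norm as the action difference, the weight `penalty_weight`, and the function name are all assumptions made for illustration; the abstract does not specify how the difference is approximated or weighted.

```python
import math


def shaped_teacher_reward(task_reward, teacher_action, student_action, penalty_weight=0.1):
    """Task reward minus a penalty on the teacher-student action gap.

    Assumption: the gap is the Euclidean distance between the two actions.
    """
    action_gap = math.dist(teacher_action, student_action)
    return task_reward - penalty_weight * action_gap


# A teacher action the student cannot reproduce lowers the teacher's reward.
reward = shaped_teacher_reward(1.0, (0.0, 0.0), (3.0, 4.0), penalty_weight=0.1)
```

Under this shaping, behaviors that the student imitates well are penalized less, which nudges the teacher toward imitable behavior.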

References

Student-Informed Teacher Training

International Conference on Learning Representations (ICLR), 2025.

Spotlight Presentation.

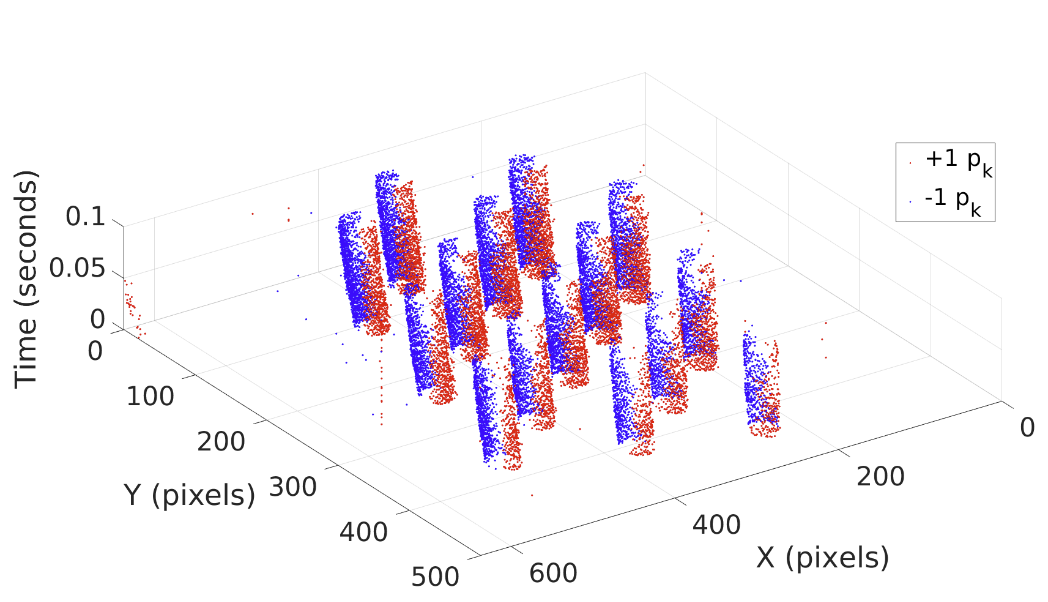

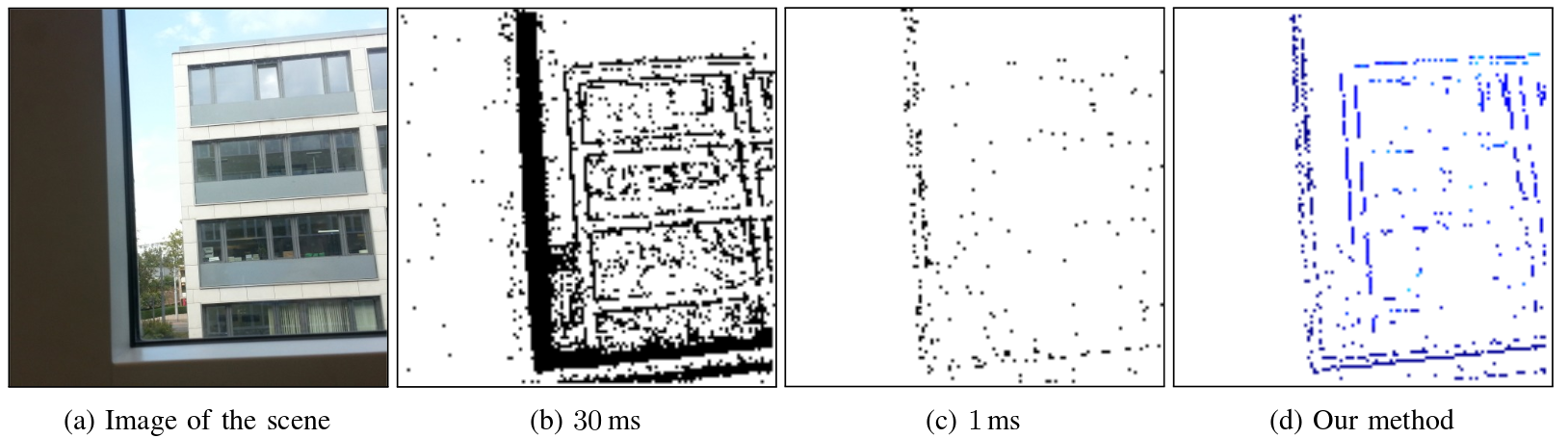

Data-driven Feature Tracking for Event Cameras with and without Frames

Because of their high temporal resolution, increased resilience to motion blur, and very sparse output, event cameras have been shown to be ideal for low-latency and low-bandwidth feature tracking, even in challenging scenarios. Existing feature tracking methods for event cameras are either handcrafted or derived from first principles but require extensive parameter tuning, are sensitive to noise, and do not generalize to different scenarios due to unmodeled effects. To tackle these deficiencies, we introduce the first data-driven feature tracker for event cameras, which leverages low-latency events to track features detected in an intensity frame. We achieve robust performance via a novel frame attention module, which shares information across feature tracks. Our tracker is designed to operate in two distinct configurations: solely with events or in a hybrid mode incorporating both events and frames. The hybrid model offers two setups: an aligned configuration where the event and frame cameras share the same viewpoint, and a hybrid stereo configuration where the event camera and the standard camera are positioned side by side. This side-by-side arrangement is particularly valuable as it provides depth information for each feature track, enhancing its utility in applications such as visual odometry and simultaneous localization and mapping.

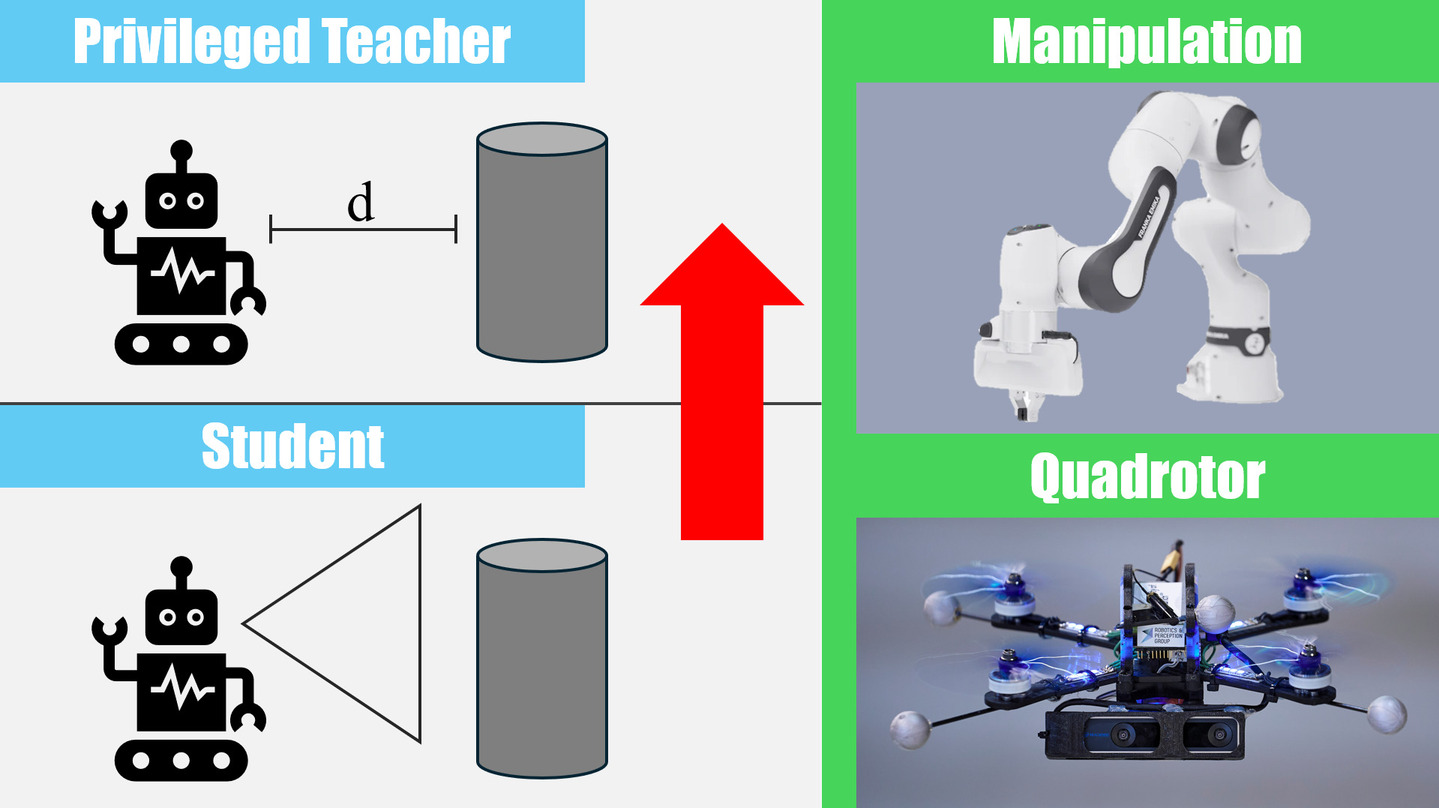

Code and Dataset for "Low Latency Automotive Vision with Event Cameras"

The computer vision algorithms used in today's advanced driver assistance systems rely on image-based RGB cameras, leading to a critical bandwidth-latency trade-off for delivering safe driving experiences. To address this, event cameras have emerged as alternative vision sensors. Event cameras measure changes in intensity asynchronously, offering high temporal resolution and sparsity, drastically reducing bandwidth and latency requirements. Despite these advantages, event camera-based algorithms either are highly efficient but lag behind image-based ones in terms of accuracy, or sacrifice the sparsity and efficiency of events to achieve comparable results. To overcome this, we propose a novel hybrid event- and frame-based object detector that preserves the advantages of each modality and thus does not suffer from this trade-off. Our method exploits the high temporal resolution and sparsity of events and the rich but low temporal resolution information in standard images to generate efficient, high-rate object detections, reducing perceptual and computational latency. We show that the use of a 20 Hz RGB camera plus an event camera can achieve the same latency as a 5,000 Hz camera with the bandwidth of a 45 Hz camera without compromising accuracy. Our approach paves the way for efficient and robust perception in edge-case scenarios by uncovering the potential of event cameras.

References

Low Latency Automotive Vision with Event Cameras

Nature, 2024.

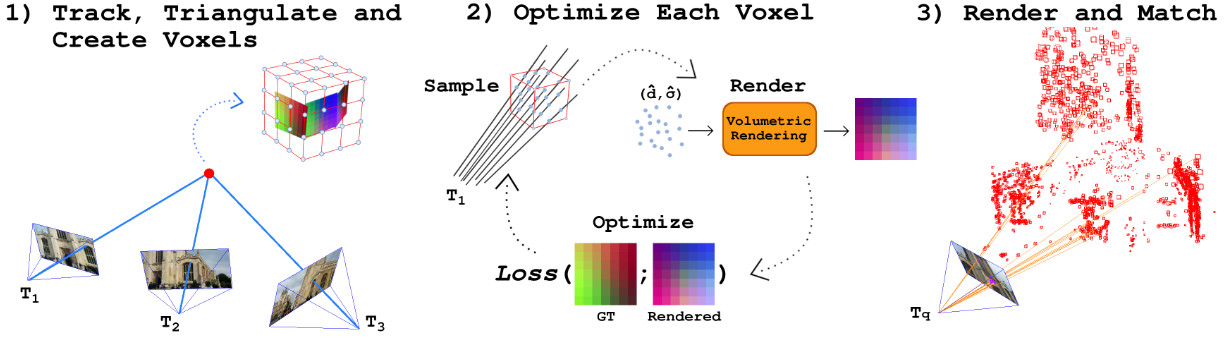

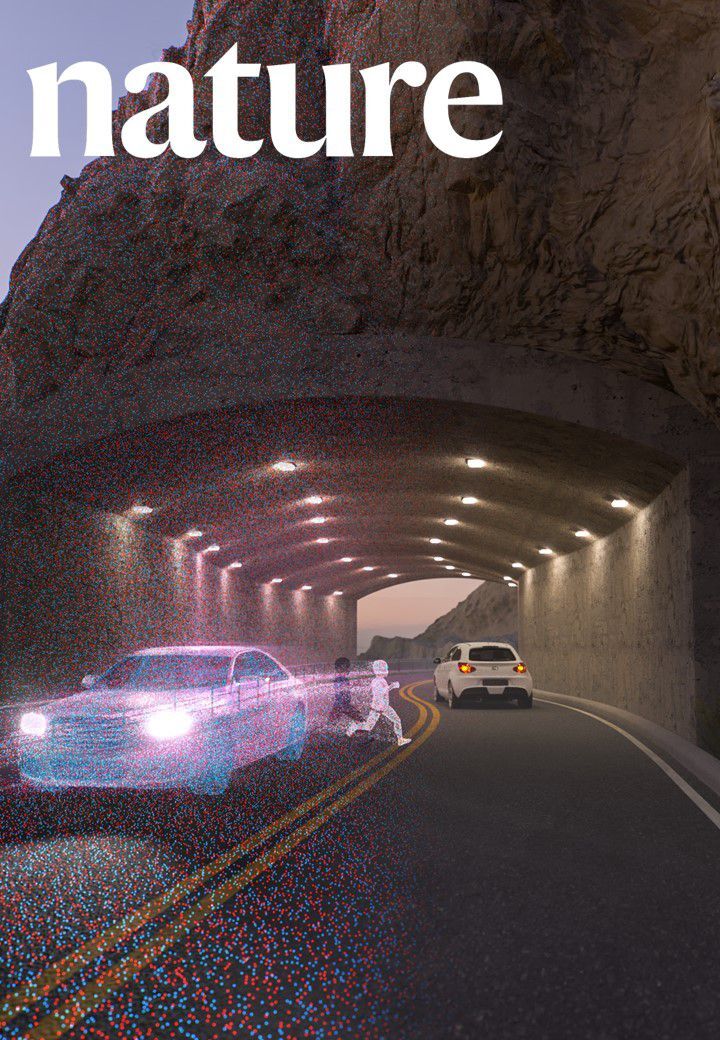

FaVoR: Features via Voxel Rendering for Camera Relocalization

Camera relocalization methods range from dense image alignment to direct camera pose regression from a query image. Among these, sparse feature matching stands out as an efficient, versatile, and generally lightweight approach with numerous applications. However, feature-based methods often struggle with significant viewpoint and appearance changes, leading to matching failures and inaccurate pose estimates. To overcome this limitation, we propose a novel approach that leverages a globally sparse yet locally dense 3D representation of 2D features. By tracking and triangulating landmarks over a sequence of frames, we construct a sparse voxel map optimized to render image patch descriptors observed during tracking. Given an initial pose estimate, we first synthesize descriptors from the voxels using volumetric rendering and then perform feature matching to estimate the camera pose. This methodology enables the generation of descriptors for unseen views, enhancing robustness to view changes. We extensively evaluate our method on the 7-Scenes and Cambridge Landmarks datasets. Our results show that our method significantly outperforms existing state-of-the-art feature representation techniques in indoor environments, achieving up to a 39% improvement in median translation error. Additionally, our approach yields comparable results to other methods for outdoor scenarios while maintaining lower memory and computational costs.

References

FaVoR: Features via Voxel Rendering for Camera Relocalization

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, Arizona, 2025.

GEM: A Generalizable Ego-Vision Multimodal World Model for Fine-Grained Ego-Motion, Object Dynamics, and Scene Composition Control

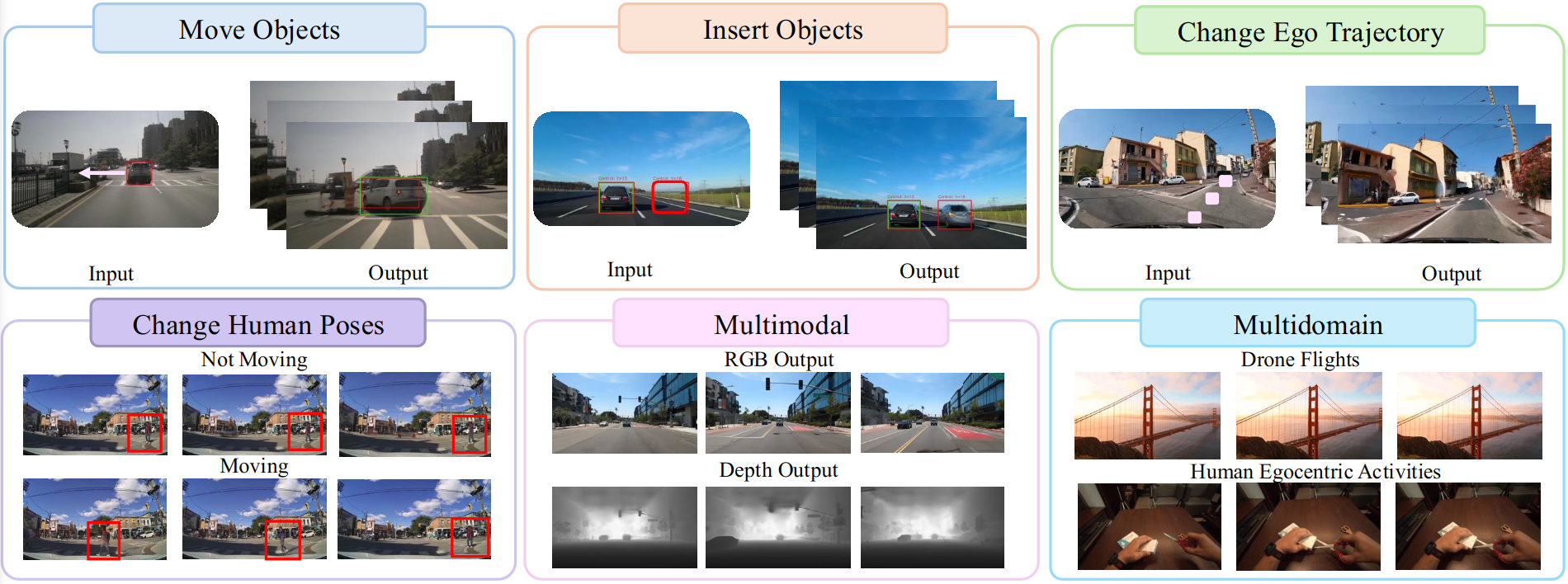

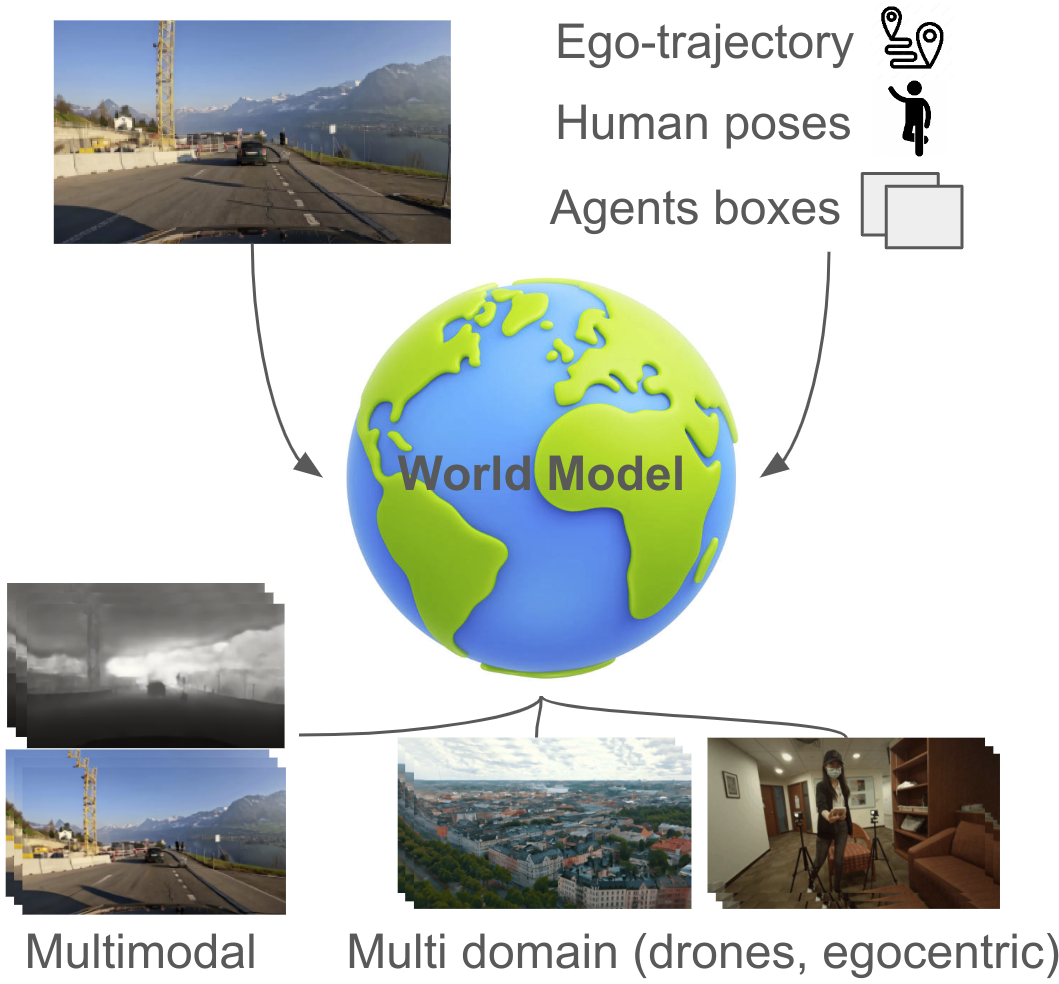

We present GEM, a Generalizable Ego-vision Multimodal world model that predicts future frames using a reference frame, sparse features, human poses, and ego-trajectories. Hence, our model has precise control over object dynamics, ego-agent motion, and human poses. GEM generates paired RGB and depth outputs for richer spatial understanding. We introduce autoregressive noise schedules to enable stable long-horizon generations. Our dataset comprises 4000+ hours of multimodal data across domains like autonomous driving, egocentric human activities, and drone flights. Pseudo-labels are used to get depth maps, ego-trajectories, and human poses. We use a comprehensive evaluation framework, including a new Control of Object Manipulation (COM) metric, to assess controllability. Experiments show that GEM excels at generating diverse, controllable scenarios and at maintaining temporal consistency over long generations.

References

GEM: A Generalizable Ego-Vision Multimodal World Model for Fine-Grained Ego-Motion, Object Dynamics, and Scene Composition Control

ArXiv, 2024.

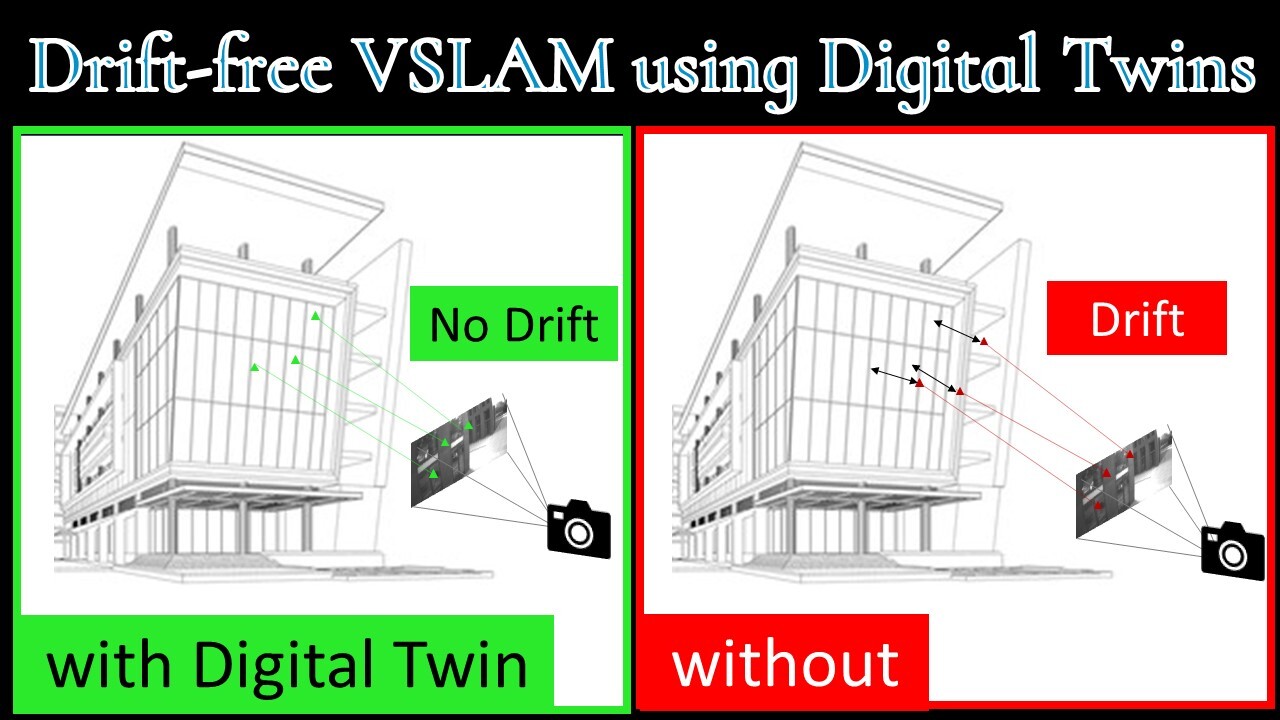

Drift-free Visual SLAM using Digital Twins

Globally-consistent localization in urban environments is crucial for autonomous systems such as self-driving vehicles and drones, as well as assistive technologies for visually impaired people. Traditional Visual-Inertial Odometry (VIO) and Visual Simultaneous Localization and Mapping (VSLAM) methods, though adequate for local pose estimation, suffer from drift in the long term due to reliance on local sensor data. While GPS counteracts this drift, it is unavailable indoors and often unreliable in urban areas. An alternative is to localize the camera to an existing 3D map using visual-feature matching. This can provide centimeter-level accurate localization but is limited by the visual similarities between the current view and the map. This paper introduces a novel approach that achieves accurate and globally-consistent localization by aligning the sparse 3D point cloud generated by the VIO/VSLAM system to a digital twin using point-to-plane matching; no visual data association is needed. The proposed method provides a 6-DoF global measurement tightly integrated into the VIO/VSLAM system. Experiments run on a high-fidelity GPS simulator and real-world data collected from a drone demonstrate that our approach outperforms state-of-the-art VIO-GPS systems and offers superior robustness against viewpoint changes compared to the state-of-the-art Visual SLAM systems.
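
To make the point-to-plane matching concrete, the following sketch evaluates the alignment cost between a few 3D points and their matched planes. It is a simplified illustration under assumptions: correspondences are taken as given, only a translation is applied (rotation is omitted), plane normals are unit length, and the function names are hypothetical rather than taken from the released system.

```python
def point_to_plane_residual(point, plane_point, unit_normal):
    """Signed distance from `point` to the plane through `plane_point`."""
    return sum(n * (p - q) for p, q, n in zip(point, plane_point, unit_normal))


def alignment_cost(points, planes, translation=(0.0, 0.0, 0.0)):
    """Sum of squared point-to-plane residuals after shifting the points.

    `planes` holds one (plane_point, unit_normal) pair per point.
    """
    cost = 0.0
    for point, (plane_point, unit_normal) in zip(points, planes):
        moved = [p + t for p, t in zip(point, translation)]
        cost += point_to_plane_residual(moved, plane_point, unit_normal) ** 2
    return cost


# Two points floating 0.5 m above a ground plane (z = 0) of the digital twin.
ground = ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
points = [(0.0, 0.0, 0.5), (1.0, 1.0, 0.5)]
cost_before = alignment_cost(points, [ground, ground])
cost_after = alignment_cost(points, [ground, ground], translation=(0.0, 0.0, -0.5))
```

Because the residual only measures distance along the plane normal, points may slide freely within a plane, which is why no visual data association is required for this kind of cost.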

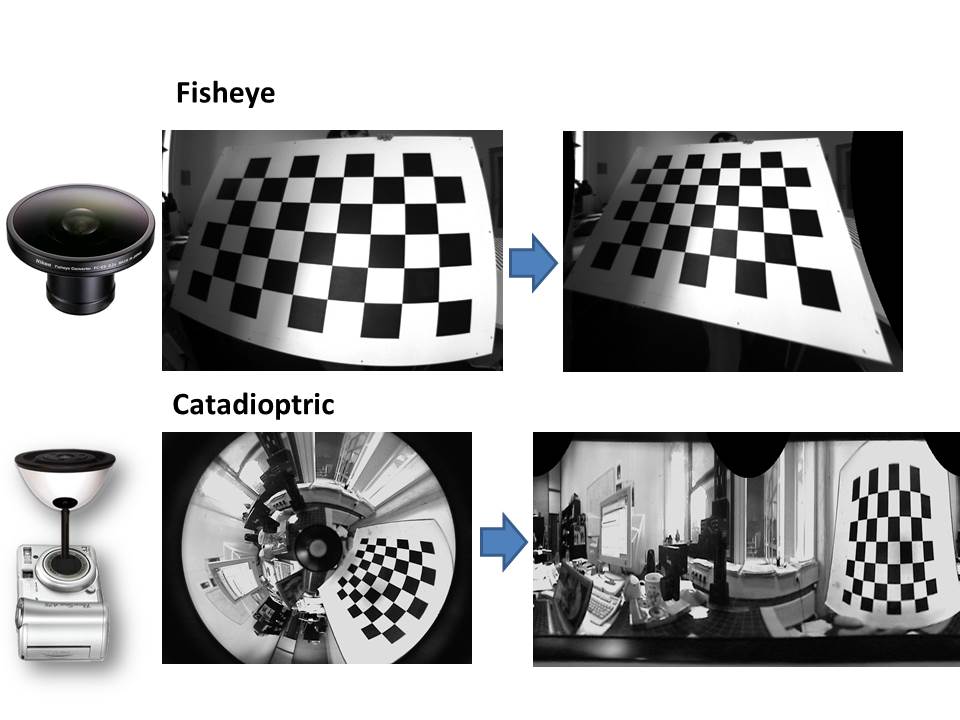

E-Calib: A Fast, Robust and Accurate Calibration Toolbox for Event Cameras

Event cameras triggered a paradigm shift in the computer vision community delineated by their asynchronous nature, low latency, and high dynamic range. Calibration of event cameras is always essential to account for the sensor intrinsic parameters and for 3D perception. However, conventional image-based calibration techniques are not applicable due to the asynchronous, binary output of the sensor. The current standard for calibrating event cameras relies on either blinking patterns or event-based image reconstruction algorithms. These approaches are difficult to deploy in factory settings and are affected by noise and artifacts degrading the calibration performance. To overcome these limitations, we present E-Calib, a novel, fast, robust, and accurate calibration toolbox for event cameras utilizing the asymmetric circle grid, for its robustness to out-of-focus scenes. The proposed method is tested in a variety of rigorous experiments for different event camera models, on circle grids with different geometric properties, and under challenging illumination conditions. The results show that our approach outperforms the state of the art in detection success rate, reprojection error, and estimation accuracy of extrinsic parameters.

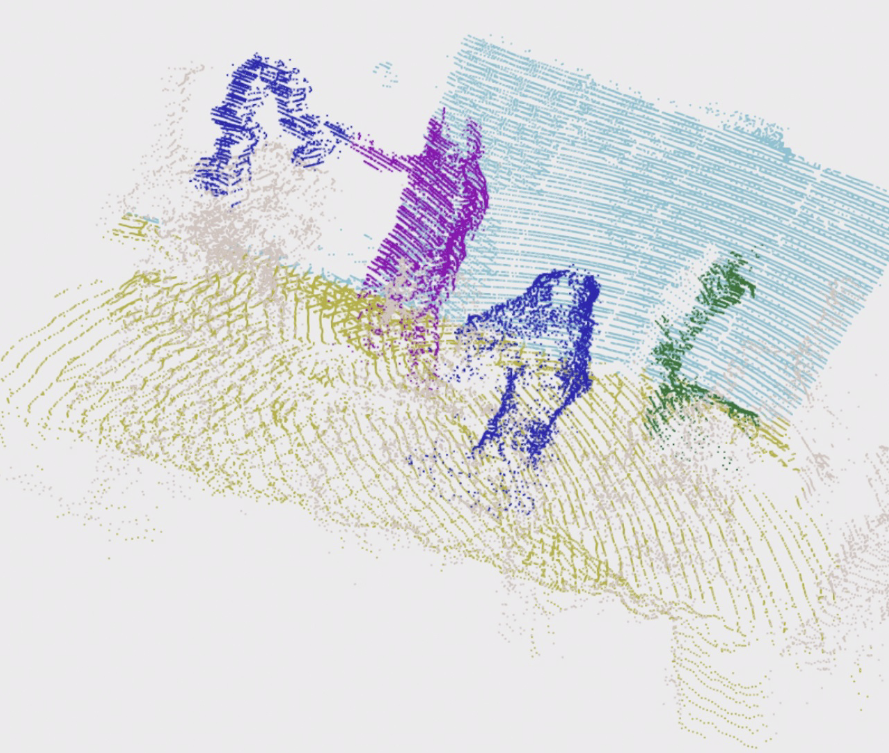

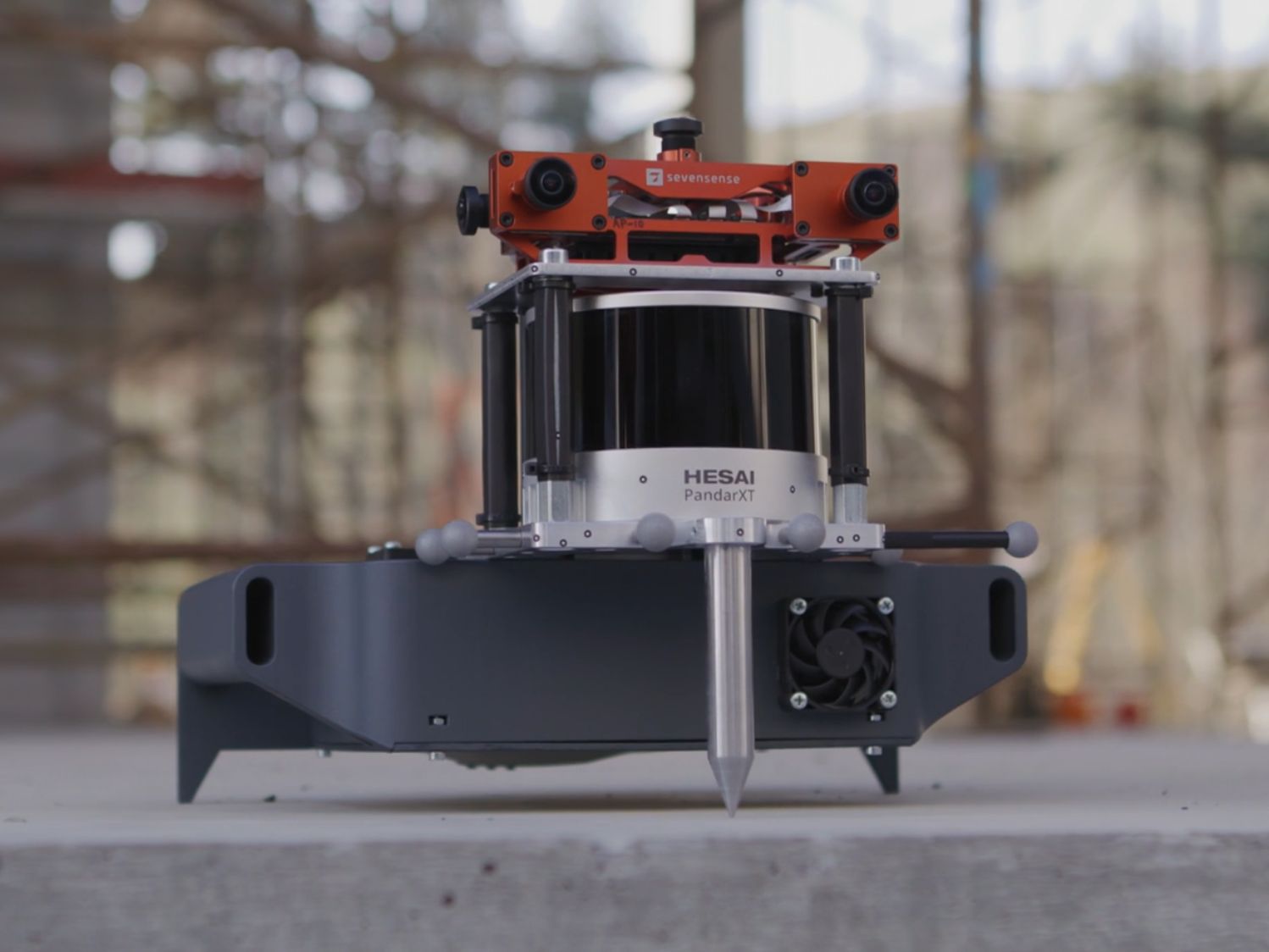

COVERED, CollabOratiVE Robot Environment Dataset for 3D Semantic segmentation

Safe human-robot collaboration (HRC) has recently gained a lot of interest with the emerging Industry 5.0 paradigm. Conventional robots are being replaced with more intelligent and flexible collaborative robots (cobots). Safe and efficient collaboration between cobots and humans largely relies on the cobot's comprehensive semantic understanding of the dynamic surroundings of industrial environments. Despite the importance of semantic understanding for such applications, 3D semantic segmentation of collaborative robot workspaces lacks sufficient research and dedicated datasets. The performance limitation caused by insufficient datasets is called the 'data hunger' problem. To overcome this current limitation, this work develops a new dataset specifically designed for this use case, named "COVERED", which includes point-wise annotated point clouds of a robotic cell. Lastly, we also provide a benchmark of current state-of-the-art (SOTA) algorithm performance on the dataset and demonstrate a real-time semantic segmentation of a collaborative robot workspace using a multi-LiDAR system. The promising results from using the trained deep networks in a real-time, dynamically changing situation show that we are on the right track. Our perception pipeline achieves 20 Hz throughput with a prediction point accuracy of >96% and >92% mean intersection over union (mIOU) while maintaining an 8 Hz throughput.

Hilti SLAM Challenge 2023: Benchmarking Single + Multi-session SLAM across Sensor Constellations in Construction

Simultaneous Localization and Mapping systems are a key enabler for positioning in both handheld and robotic applications. The Hilti SLAM Challenges organized over the past years have been successful at benchmarking some of the world's best SLAM Systems with high accuracy. However, more capabilities of these systems are yet to be explored, such as platform agnosticism across varying sensor suites and multi-session SLAM. These factors indirectly serve as an indicator of robustness and ease of deployment in real-world applications. No publicly available dataset and benchmark combination considers these factors together. The Hilti SLAM Challenge 2023 Dataset and Benchmark addresses this issue. Additionally, we propose a novel fiducial marker design for a pre-surveyed point on the ground to be observable from an off-the-shelf LiDAR mounted on a robot, and an algorithm to estimate its position at mm-level accuracy. Results from the challenge show an increase in overall participation, single-session SLAM systems getting increasingly accurate, successfully operating across varying sensor suites, but relatively few participants performing multi-session SLAM.

E-NeRF: Neural Radiance Fields from a Moving Event Camera

Estimating neural radiance fields (NeRFs) from "ideal" images has been extensively studied in the computer vision community. Most approaches assume optimal illumination and slow camera motion. These assumptions are often violated in robotic applications, where images may contain motion blur, and the scene may not have suitable illumination. This can cause significant problems for downstream tasks such as navigation, inspection, or visualization of the scene. To alleviate these problems, we present E-NeRF, the first method which estimates a volumetric scene representation in the form of a NeRF from a fast-moving event camera. Our method can recover NeRFs during very fast motion and in high-dynamic-range conditions where frame-based approaches fail. We show that rendering high-quality frames is possible by only providing an event stream as input. Furthermore, by combining events and frames, we can estimate NeRFs of higher quality than state-of-the-art approaches under severe motion blur. We also show that combining events and frames can overcome failure cases of NeRF estimation in scenarios where only a few input views are available without requiring additional regularization.

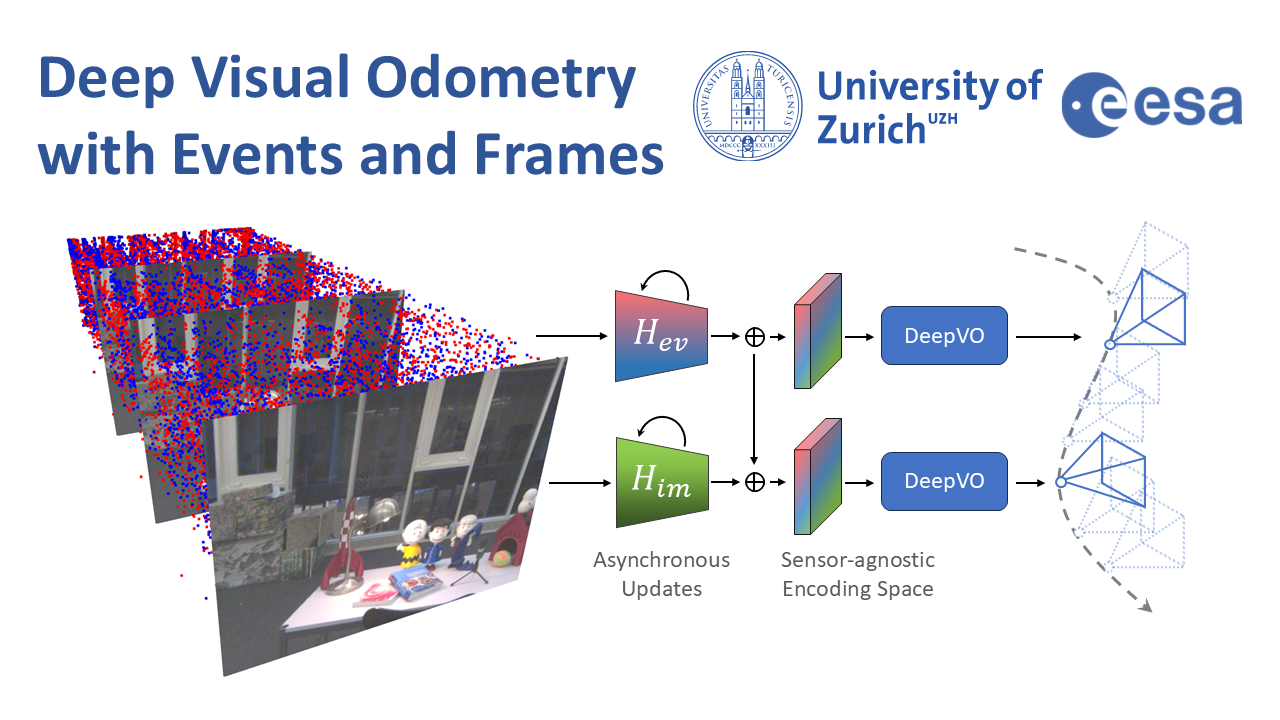

End-to-End Learned Event- and Image-based Visual Odometry

Visual Odometry (VO) is crucial for autonomous robotic navigation, especially in GPS-denied environments like planetary terrains. To improve robustness, recent model-based VO systems have begun combining standard and event-based cameras. While event cameras excel in low-light and high-speed motion, standard cameras provide dense and easier-to-track features. However, the field of image- and event-based VO still predominantly relies on model-based methods and is yet to fully integrate recent image-only advancements leveraging end-to-end learning-based architectures. Seamlessly integrating the two modalities remains challenging due to their different nature, one asynchronous, the other not, limiting the potential for a more effective image- and event-based VO. We introduce RAMP-VO, the first end-to-end learned image- and event-based VO system. It leverages novel Recurrent, Asynchronous, and Massively Parallel (RAMP) encoders capable of fusing asynchronous events with image data, providing 8x faster inference and 33% more accurate predictions than existing solutions. Despite being trained only in simulation, RAMP-VO outperforms previous methods on the newly introduced Apollo and Malapert datasets, and on existing benchmarks, where it improves image- and event-based methods by 58.8% and 30.6%, paving the way for robust and asynchronous VO in space.

References

Deep Visual Odometry with Events and Frames

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024.

Reinforcement Learning Meets Visual Odometry

Visual Odometry (VO) is essential to downstream mobile robotics and augmented/virtual reality tasks. Despite recent advances, existing VO methods still rely on heuristic design choices that require several weeks of hyperparameter tuning by human experts, hindering generalizability and robustness. We address these challenges by reframing VO as a sequential decision-making task and applying Reinforcement Learning (RL) to adapt the VO process dynamically. Our approach introduces a neural network, operating as an agent within the VO pipeline, to make decisions such as keyframe and grid-size selection based on real-time conditions. Our method minimizes reliance on heuristic choices using a reward function based on pose error, runtime, and other metrics to guide the system. Our RL framework treats the VO system and the image sequence as an environment, with the agent receiving observations from keypoints, map statistics, and prior poses. Experimental results using classical VO methods and public benchmarks demonstrate improvements in accuracy and robustness, validating the generalizability of our RL-enhanced VO approach to different scenarios. We believe this paradigm shift advances VO technology by eliminating the need for time-intensive parameter tuning of heuristics.

A Hybrid ANN-SNN Architecture for Low-Power and Low-Latency Visual Perception

Spiking Neural Networks (SNNs) are a class of bioinspired neural networks that promise to bring low-power and low-latency inference to edge devices through the use of asynchronous and sparse processing. However, being temporal models, SNNs depend heavily on expressive states to generate predictions on par with classical artificial neural networks (ANNs). These states converge only after long transient time periods, and quickly decay in the absence of input data, leading to higher latency, power consumption, and lower accuracy. In this work, we address this issue by initializing the state with an auxiliary ANN running at a low rate. The SNN then uses the state to generate predictions with high temporal resolution until the next initialization phase. Our hybrid ANN-SNN model thus combines the best of both worlds: it does not suffer from long state transients and state decay thanks to the ANN, and can generate predictions with high temporal resolution, low latency, and low power thanks to the SNN. We show for the task of event-based 2D and 3D human pose estimation that our method consumes 88% less power with only a 4% decrease in performance compared to its fully ANN counterparts when run at the same inference rate. Moreover, when compared to SNNs, our method achieves a 74% lower error. This research thus provides a new understanding of how ANNs and SNNs can be used to maximize their respective benefits.

State Space Models for Event Cameras

Today, state-of-the-art deep neural networks that process event-camera data first convert a temporal window of events into dense, grid-like input representations. As such, they exhibit poor generalizability when deployed at higher inference frequencies (i.e., smaller temporal windows) than the ones they were trained on. We address this challenge by introducing state-space models (SSMs) with learnable timescale parameters to event-based vision. This design adapts to varying frequencies without the need to retrain the network at different frequencies. Additionally, we investigate two strategies to counteract aliasing effects when deploying the model at higher frequencies. We comprehensively evaluate our approach against existing methods based on RNN and Transformer architectures across various benchmarks, including Gen1 and 1 Mpx event camera datasets. Our results demonstrate that SSM-based models train 33% faster and also exhibit minimal performance degradation when tested at higher frequencies than the training input. Traditional RNN and Transformer models exhibit performance drops of more than 20 mAP, with SSMs having a drop of 3.76 mAP, highlighting the effectiveness of SSMs in event-based vision tasks.
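
One way to see why a timescale parameter helps at different inference frequencies is a scalar state-space model discretized with a zero-order hold. The sketch below is an illustration under assumptions, not the paper's architecture: it uses a single scalar state, a constant input, and hypothetical function names, and it simply rescales the step size `delta` when the model is run at twice the rate.

```python
import math


def discretize(a, b, delta):
    """Zero-order-hold discretization of dx/dt = a*x + b*u with step `delta`."""
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar


def run(a, b, delta, inputs, x0=0.0):
    """Roll the discretized recurrence x <- a_bar*x + b_bar*u over `inputs`."""
    a_bar, b_bar = discretize(a, b, delta)
    x = x0
    for u in inputs:
        x = a_bar * x + b_bar * u
    return x


# One step at the training rate versus two steps at double the rate,
# with the timescale halved and the same constant input.
state_train_rate = run(a=-2.0, b=1.0, delta=0.1, inputs=[1.0])
state_double_rate = run(a=-2.0, b=1.0, delta=0.05, inputs=[1.0, 1.0])
```

With the step size rescaled, both runs reach the same state up to floating-point error, whereas a recurrence with fixed discrete weights would see its effective dynamics change with the input rate.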

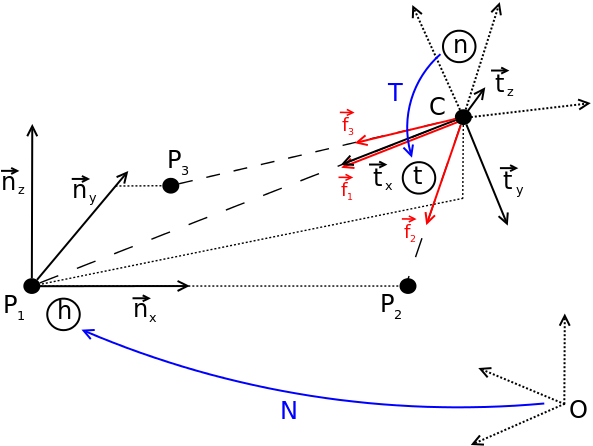

An N-Point Linear Solver for Line and Motion Estimation with Event Cameras

Event cameras respond primarily to edges, which are formed by strong gradients, and are thus particularly well-suited for line-based motion estimation. Recent work has shown that events generated by a single line each satisfy a polynomial constraint which describes a manifold in the space-time volume. Multiple such constraints can be solved simultaneously to recover the partial linear velocity and line parameters. In this work, we show that, with a suitable line parametrization, this system of constraints is actually linear in the unknowns, which allows us to design a novel linear solver. Unlike existing solvers, our linear solver (i) is fast and numerically stable since it does not rely on expensive root finding, (ii) can solve both minimal and overdetermined systems with more than 5 events (i.e. N >= 5), and (iii) admits the characterization of all degenerate cases and multiple solutions. The found line parameters are singularity-free and have a fixed scale, which eliminates the need for auxiliary constraints typically encountered in previous work. To recover the full linear camera velocity we fuse observations from multiple lines with a novel velocity averaging scheme that relies on a geometrically-motivated residual, and thus solves the problem more efficiently than previous schemes which minimize an algebraic residual. Extensive experiments in synthetic and real-world settings demonstrate that our method surpasses the previous work in numerical stability, and operates over 600 times faster.

References

An N-Point Linear Solver for Line and Motion Estimation with Event Cameras

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, 2024.

Oral Presentation.

Mitigating Motion Blur in Neural Radiance Fields with Events and Frames

Neural Radiance Fields (NeRFs) have shown great potential in novel view synthesis. However, they struggle to render sharp images when the data used for training is affected by motion blur. On the other hand, event cameras excel in dynamic scenes as they measure brightness changes with microsecond resolution and are thus only marginally affected by blur. Recent methods attempt to enhance NeRF reconstructions under camera motion by fusing frames and events. However, they face challenges in recovering accurate color content or constrain the NeRF to a set of predefined camera poses, harming reconstruction quality in challenging conditions. This paper proposes a novel formulation addressing these issues by leveraging both model- and learning-based modules. We explicitly model the blur formation process, exploiting the event double integral as an additional model-based prior. Additionally, we model the event-pixel response using an end-to-end learnable response function, allowing our method to adapt to non-idealities in the real event-camera sensor. We show, on synthetic and real data, that the proposed approach outperforms existing deblur NeRFs that use only frames as well as those that combine frames and events by +6.13 dB and +2.48 dB, respectively.
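
For readers unfamiliar with the event double integral, a commonly used form of the model is sketched below. The notation is an assumption, since the abstract gives no formula: $B$ is the blurry frame, $L(t)$ the latent sharp image at time $t$, $f$ the reference time at mid-exposure, $T$ the exposure time, $c$ the contrast threshold, and $e(s)$ the signed event stream at a pixel.

```latex
B = \frac{1}{T} \int_{f - T/2}^{f + T/2} L(t)\, dt,
\qquad
L(t) = L(f)\, \exp\!\big(c\, E(t)\big),
\qquad
E(t) = \int_{f}^{t} e(s)\, ds
\quad\Longrightarrow\quad
B = \frac{L(f)}{T} \int_{f - T/2}^{f + T/2} \exp\!\big(c\, E(t)\big)\, dt
```

The inner integral accumulates events into a brightness change and the outer integral averages over the exposure, which is where the name "double integral" comes from.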

References

Mitigating Motion Blur in Neural Radiance Fields with Events and Frames

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, 2024.

Contrastive Initial State Buffer for Reinforcement Learning

In Reinforcement Learning, the trade-off between exploration and exploitation poses a complex challenge for achieving efficient learning from limited samples. While recent works have been effective in leveraging past experiences for policy updates, they often overlook the potential of reusing past experiences for data collection. Independent of the underlying RL algorithm, we introduce the concept of a Contrastive Initial State Buffer, which strategically selects states from past experiences and uses them to initialize the agent in the environment in order to guide it toward more informative states. We validate our approach on two complex robotic tasks without relying on any prior information about the environment: (i) locomotion of a quadruped robot traversing challenging terrains and (ii) a quadcopter drone racing through a track. The experimental results show that our initial state buffer achieves higher task performance than the nominal baseline while also speeding up training convergence.

Dense Continuous-Time Optical Flow from Events and Frames

We present a method for estimating dense continuous-time optical flow. Traditional dense optical flow methods compute the pixel displacement between two images. Due to missing information, these approaches cannot recover the pixel trajectories in the blind time between two images. In this work, we show that it is possible to compute per-pixel, continuous-time optical flow by additionally using events from an event camera. Events provide temporally fine-grained information about movement in image space due to their asynchronous nature and microsecond response time. We leverage these benefits to predict pixel trajectories densely in continuous time via parameterized Bezier curves. To achieve this, we introduce multiple innovations to build a neural network with strong inductive biases for this task: First, we build multiple sequential correlation volumes in time using event data. Second, we use Bezier curves to index these correlation volumes at multiple timestamps along the trajectory. Third, we use the retrieved correlation to update the Bezier curve representations iteratively. Our method can optionally include image pairs to boost performance further. The proposed approach outperforms existing image-based and event-based methods, achieving 11.5% lower EPE on DSEC-Flow. Finally, we introduce a novel synthetic dataset MultiFlow for pixel trajectory regression on which our method is currently the only successful approach.
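
The idea of a pixel trajectory parameterized by a Bezier curve can be sketched as follows. This is a generic Bernstein-polynomial evaluation, not the network from the paper; the function name, the 2D control points, and the normalized time `t` in [0, 1] are illustrative assumptions.

```python
from math import comb


def bezier_point(control_points, t):
    """Evaluate a 2D Bezier curve at normalized time t in [0, 1].

    The curve degree is len(control_points) - 1.
    """
    n = len(control_points) - 1
    x = y = 0.0
    for i, (px, py) in enumerate(control_points):
        # Bernstein basis weight of the i-th control point.
        weight = comb(n, i) * (1.0 - t) ** (n - i) * t ** i
        x += weight * px
        y += weight * py
    return x, y


# Pixel displacement queried at three timestamps between two frames.
controls = [(0.0, 0.0), (1.0, 2.0), (2.0, 0.0)]
trajectory = [bezier_point(controls, t) for t in (0.0, 0.5, 1.0)]
```

A handful of control points per pixel is enough to query the displacement at any timestamp, which is what allows correlation volumes to be indexed at multiple times along the trajectory.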

References

Dense Continuous-Time Optical Flow from Events and Frames

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2024.

Revisiting Token Pruning for Object Detection and Instance Segmentation

Vision Transformers (ViTs) have shown impressive performance in computer vision, but their high computational cost, quadratic in the number of tokens, limits their adoption in computation-constrained applications. However, this large number of tokens may not be necessary, as not all tokens are equally important. In this paper, we investigate token pruning to accelerate inference for object detection and instance segmentation, extending prior works from image classification. Through extensive experiments, we offer four insights for dense tasks: (i) tokens should not be completely pruned and discarded, but rather preserved in the feature maps for later use; (ii) reactivating previously pruned tokens can further enhance model performance; (iii) a dynamic pruning rate based on images is better than a fixed pruning rate; and (iv) a lightweight, 2-layer MLP can effectively prune tokens, achieving accuracy comparable to complex gating networks with a simpler design. We evaluate the impact of these design choices on the COCO dataset and present a method integrating these insights that outperforms prior-art token pruning models, significantly reducing the performance drop from ~1.5 mAP to ~0.3 mAP for both boxes and masks. Compared to the dense counterpart that uses all tokens, our method achieves up to 34% faster inference speed for the whole network and 46% for the backbone.
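
Insights (i), (iii), and (iv) can be illustrated together with a small sketch. Everything here is an assumption for illustration: the weights are hand-picked rather than learned, the "block" is a stand-in for a transformer layer, a fixed score threshold is used as one simple way to obtain an image-dependent pruning rate, and the function names are hypothetical.

```python
import math


def mlp_keep_score(token, w1, b1, w2, b2):
    """Two-layer MLP: ReLU hidden layer followed by a sigmoid keep score."""
    hidden = [
        max(0.0, sum(w * x for w, x in zip(row, token)) + bias)
        for row, bias in zip(w1, b1)
    ]
    logit = sum(w * h for w, h in zip(w2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-logit))


def prune_and_preserve(tokens, block, score_fn, threshold=0.5):
    """Run `block` only on tokens scoring above `threshold`.

    Pruned tokens are copied through unchanged, so the feature map keeps
    its full size and later layers can still reactivate them.
    """
    output, kept = [], 0
    for token in tokens:
        if score_fn(token) > threshold:
            output.append(block(token))
            kept += 1
        else:
            output.append(list(token))
    return output, kept


def score(token):
    # Hand-picked weights, purely for demonstration.
    return mlp_keep_score(token, w1=[[1.0]], b1=[0.0], w2=[4.0], b2=-2.0)


tokens = [[1.0], [-1.0]]
features, kept = prune_and_preserve(tokens, lambda t: [2.0 * x for x in t], score)
```

Because the number of tokens above the threshold differs from image to image, the effective pruning rate adapts to the content instead of being fixed in advance.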

From Chaos Comes Order: Ordering Event Representations for Object Recognition and Detection

Selecting dense event representations for deep neural networks is exceedingly slow since it involves training a neural network for each representation and selecting the best one based on the validation score. In this work, we eliminate this bottleneck by selecting the representation based on the Gromov-Wasserstein Discrepancy (GWD) on the validation set. This metric is 200 times faster to compute and preserves the task performance ranking of event representations across multiple representations, network backbones, datasets and tasks. We use it to, for the first time, perform a hyperparameter search on a large family of event representations, revealing new and powerful event representations that exceed the state of the art. Our optimized representations outperform existing representations by 1.7 mAP on the 1 Mpx dataset and 0.3 mAP on the Gen1 dataset, two established object detection benchmarks, and reach a 3.8% higher classification score on the mini N-ImageNet benchmark. Moreover, we outperform the state of the art by 2.1 mAP on Gen1 and state-of-the-art feed-forward methods by 6.0 mAP on the 1 Mpx dataset. This work opens a new unexplored field of explicit representation optimization for event-based learning.

Autonomous Power Line Inspection with Drones via Perception-Aware MPC

Drones have the potential to revolutionize power line inspection by increasing productivity, reducing inspection time, improving data quality, and eliminating the risks for human operators. Current state-of-the-art systems for power line inspection have two shortcomings: (i) control is decoupled from perception and needs accurate information about the location of the power lines and masts; (ii) collision avoidance is decoupled from the power line tracking, which results in poor tracking in the vicinity of the power masts, and, consequently, in decreased data quality for visual inspection. In this work, we propose a model predictive controller (MPC) that overcomes these limitations by tightly coupling perception and action. Our controller generates commands that maximize the visibility of the power lines while, at the same time, safely avoiding the power masts. For power line detection, we propose a lightweight learning-based detector that is trained only on synthetic data and is able to transfer zero-shot to real-world power line images. We validate our system in simulation and real-world experiments on a mock-up power line infrastructure. We release the code and dataset open-source.

References

Champion-level Drone Racing using Deep Reinforcement Learning

First-person view (FPV) drone racing is a televised sport in which professional competitors pilot high-speed aircraft through a three-dimensional circuit. Each pilot sees the environment from their drone's perspective via video streamed from an onboard camera. Reaching the level of professional pilots with an autonomous drone is challenging since the robot needs to fly at its physical limits while estimating its speed and location in the circuit exclusively from onboard sensors. Here we introduce Swift, an autonomous system that can race physical vehicles at the level of the human world champions. The system combines deep reinforcement learning in simulation with data collected in the physical world. Swift competed against three human champions, including the world champions of two international leagues, in real-world head-to-head races. Swift won multiple races against each of the human champions and demonstrated the fastest recorded race time. This work represents a milestone for mobile robotics and machine intelligence, which may inspire the deployment of hybrid learning-based solutions in other physical systems.

References

Champion-level Drone Racing using Deep Reinforcement Learning

Nature, 2023

Active Exposure Control for Robust Visual Odometry in HDR Environments

We propose an active exposure control method to improve the robustness of visual odometry in HDR (high dynamic range) environments. Our method evaluates the proper exposure time by maximizing a robust gradient-based image quality metric. The optimization is achieved by exploiting the photometric response function of the camera. Our exposure control method is evaluated in different real world environments and outperforms the built-in auto-exposure function of the camera. To validate the benefit of our approach, we adapt a state-of-the-art visual odometry pipeline (SVO) to work with varying exposure time and demonstrate improved performance using our exposure control method in challenging HDR environments. We release the code open-source.
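As a rough illustration of exposure selection by maximizing a gradient-based metric, the sketch below scores a few candidate exposure times on a simulated image and keeps the best one. It is not the released method: it assumes a linear, clipped camera response, uses a plain sum of absolute gradients rather than the robust metric, and replaces the optimization over the photometric response function with a brute-force search. All function names are hypothetical.

```python
def simulate_image(radiance, exposure):
    """Assumed linear response: intensity = radiance * exposure, clipped at 1.0."""
    return [[min(1.0, r * exposure) for r in row] for row in radiance]


def gradient_metric(image):
    """Sum of absolute horizontal and vertical finite differences."""
    rows, cols = len(image), len(image[0])
    total = 0.0
    for y in range(rows):
        for x in range(cols):
            if x + 1 < cols:
                total += abs(image[y][x + 1] - image[y][x])
            if y + 1 < rows:
                total += abs(image[y + 1][x] - image[y][x])
    return total


def best_exposure(radiance, candidates):
    """Brute-force search: keep the candidate with the largest gradient metric."""
    return max(candidates, key=lambda e: gradient_metric(simulate_image(radiance, e)))


# Toy scene: too short an exposure flattens gradients, too long saturates them.
scene = [[1.0, 2.0], [3.0, 4.0]]
chosen = best_exposure(scene, [0.05, 0.25, 0.5, 1.0])
```

In this toy scene the intermediate exposure wins because it spreads the radiance over the full intensity range without saturating any pixel.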

References

Real-time Neural MPC: Deep Learning Model Predictive Control for Quadrotors and Agile Robotic Platforms

Model Predictive Control (MPC) has become a popular framework in embedded control for high-performance autonomous systems. However, to achieve good control performance using MPC, an accurate dynamics model is key. To maintain real-time operation, the dynamics models used on embedded systems have been limited to simple first-principle models, which substantially limits their representative power. In contrast to such simple models, machine learning approaches, specifically neural networks, have been shown to accurately model even complex dynamic effects, but their large computational complexity has hindered their combination with fast real-time iteration loops. With this work, we present Real-time Neural MPC, a framework to efficiently integrate large, complex neural network architectures as dynamics models within a model-predictive control pipeline. Our experiments, performed in simulation and the real world onboard a highly agile quadrotor platform, demonstrate the capabilities of the described system to run learned models with previously infeasible modeling capacity using gradient-based online optimization MPC. Compared to prior implementations of neural networks in online optimization MPC, we can leverage models of over 4000 times larger parametric capacity in a 50 Hz real-time window on an embedded platform. Further, we show the feasibility of our framework on real-world problems by reducing the positional tracking error by up to 82% when compared to state-of-the-art MPC approaches without neural network dynamics.

References

Cracking Double-Blind Review: Authorship Attribution with Deep Learning

Double-blind peer review is considered a pillar of academic research because it is perceived to ensure a fair, unbiased, and fact-centered scientific discussion. Yet, experienced researchers can often correctly guess from which research group an anonymous submission originates, biasing the peer-review process. In this work, we present a transformer-based, neural-network architecture that only uses the text content and the author names in the bibliography to attribute an anonymous manuscript to an author. To train and evaluate our method, we created the largest authorship-identification dataset to date. It leverages all research papers publicly available on arXiv amounting to over 2 million manuscripts. In arXiv-subsets with up to 2,000 different authors, our method achieves an unprecedented authorship attribution accuracy, where up to 73% of papers are attributed correctly. We present a scaling analysis to highlight the applicability of the proposed method to even larger datasets when sufficient compute capabilities are more widely available to the academic community. Furthermore, we analyze the attribution accuracy in settings where the goal is to identify all authors of an anonymous manuscript. Thanks to our method, we are not only able to predict the author of an anonymous work but we also provide empirical evidence of the key aspects that make a paper attributable. We have open-sourced the necessary tools to reproduce our experiments.

References

Microgravity induces overconfidence in perceptual decision-making

Does gravity affect decision-making? This question comes into sharp focus as plans for interplanetary human space missions solidify. In the framework of Bayesian brain theories, gravity encapsulates a strong prior, anchoring agents to a reference frame via the vestibular system, informing their decisions and possibly their integration of uncertainty. What happens when such a strong prior is altered? We address this question using a self-motion estimation task in a space analog environment under conditions of altered gravity. Two participants were cast as remote drone operators orbiting Mars in a virtual reality environment on board a parabolic flight, where both hyper- and microgravity conditions were induced. From a first-person perspective, participants viewed a drone exiting a cave and had to first predict a collision and then provide a confidence estimate of their response. We evoked uncertainty in the task by manipulating the motion's trajectory angle. Post-decision subjective confidence reports were negatively predicted by stimulus uncertainty, as expected. Uncertainty alone did not impact overt behavioral responses (performance, choice) differentially across gravity conditions. However, microgravity predicted higher subjective confidence, especially in interaction with stimulus uncertainty. These results suggest that variables relating to uncertainty affect decision-making distinctly in microgravity, highlighting the possible need for automatized, compensatory mechanisms when considering human factors in space research.

References

Recurrent Vision Transformers for Object Detection with Event Cameras

We present Recurrent Vision Transformers (RVTs), a novel backbone for object detection with event cameras. Event cameras provide visual information with sub-millisecond latency at a high-dynamic range and with strong robustness against motion blur. These unique properties offer great potential for low-latency object detection and tracking in time-critical scenarios. Prior work in event-based vision has achieved outstanding detection performance but at the cost of substantial inference time, typically beyond 40 milliseconds. By revisiting the high-level design of recurrent vision backbones, we reduce inference time by a factor of 5 while retaining similar performance. To achieve this, we explore a multi-stage design that utilizes three key concepts in each stage: First, a convolutional prior that can be regarded as a conditional positional embedding. Second, local- and dilated global self-attention for spatial feature interaction. Third, recurrent temporal feature aggregation to minimize latency while retaining temporal information. RVTs can be trained from scratch to reach state-of-the-art performance on event-based object detection - achieving an mAP of 47.2% on the Gen1 automotive dataset. At the same time, RVTs offer fast inference (12 ms on a T4 GPU) and favorable parameter efficiency (5 times fewer than prior art). Our study brings new insights into effective design choices that could be fruitful for research beyond event-based vision.

References

Hilti-Oxford Dataset: A Millimetre-Accurate Benchmark for Simultaneous Localization and Mapping

References

Training Efficient Controllers via Analytic Policy Gradient

Control design for robotic systems is complex and often requires solving an optimization to follow a trajectory accurately. Online optimization approaches like Model Predictive Control (MPC) have been shown to achieve great tracking performance, but require high computing power. Conversely, learning-based offline optimization approaches, such as Reinforcement Learning (RL), allow fast and efficient execution on the robot but hardly match the accuracy of MPC in trajectory tracking tasks. In systems with limited compute, such as aerial vehicles, an accurate controller that is efficient at execution time is imperative. We propose an Analytic Policy Gradient (APG) method to tackle this problem. APG exploits the availability of differentiable simulators by training a controller offline with gradient descent on the tracking error. We address training instabilities that frequently occur with APG through curriculum learning and experiment on a widely used controls benchmark, the CartPole, and two common aerial robots, a quadrotor and a fixed-wing drone. Our proposed method outperforms both model-based and model-free RL methods in terms of tracking error. Concurrently, it achieves similar performance to MPC while requiring more than an order of magnitude less computation time. Our work provides insights into the potential of APG as a promising control method for robotics. To facilitate the exploration of APG, we open-source our code and make it publicly available.
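The core mechanism, gradient descent on a tracking error differentiated through the simulator, can be sketched on a toy problem. The example below is an illustration under strong assumptions and not the released code: the system is a 1D single integrator, the policy is a single gain `k`, the reference is the origin, the derivative is propagated by hand instead of by an autodiff framework, and no curriculum is used. All names and constants are hypothetical.

```python
def rollout_loss_and_grad(k, x0=1.0, dt=0.1, steps=20):
    """Simulate x_{t+1} = x_t + dt * u_t with u_t = -k * x_t.

    Returns the summed squared tracking error and its exact derivative
    with respect to k, obtained by propagating dx/dk alongside the state.
    """
    x, dx_dk = x0, 0.0
    loss, dloss_dk = 0.0, 0.0
    for _ in range(steps):
        u = -k * x
        du_dk = -x - k * dx_dk        # product rule on u = -k * x
        x = x + dt * u                # differentiable simulator step
        dx_dk = dx_dk + dt * du_dk    # sensitivity of the new state
        loss += x * x
        dloss_dk += 2.0 * x * dx_dk
    return loss, dloss_dk


def train(k=0.0, lr=0.05, iters=200):
    """Plain gradient descent on the policy parameter."""
    history = []
    for _ in range(iters):
        loss, grad = rollout_loss_and_grad(k)
        history.append(loss)
        k -= lr * grad
    return k, history


gain, losses = train()
```

Because the gradient is exact, no sampling of returns is needed, which is the property that distinguishes this family of methods from model-free policy gradients.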

References

Tightly-coupled Fusion of Global Positional Measurements in Optimization-based Visual-Inertial Odometry

We are excited to release fully open-source our code to tightly fuse global positional measurements in visual-inertial odometry (VIO)! Motivated by the goal of achieving robust, drift-free pose estimation in long-term autonomous navigation, in this work we propose a methodology to fuse global positional information with visual and inertial measurements in a tightly-coupled nonlinear-optimization-based estimator. Unlike previous works, which are loosely-coupled, the use of a tightly-coupled approach allows exploiting the correlations amongst all the measurements. A sliding window of the most recent system states is estimated by minimizing a cost function that includes visual re-projection errors, relative inertial errors, and global positional residuals. We use IMU preintegration to formulate the inertial residuals and leverage the outcome of this algorithm to efficiently compute the global position residuals. The experimental results show that the proposed method achieves accurate and globally consistent estimates, with a negligible increase in the computational cost of the optimization. Our method consistently outperforms the loosely-coupled fusion approach. The mean position error is reduced by up to 50% with respect to the loosely-coupled approach in outdoor Unmanned Aerial Vehicle (UAV) flights, where the global position information is given by noisy GPS measurements. To the best of our knowledge, this is the first work where global positional measurements are tightly fused in an optimization-based visual-inertial odometry algorithm, leveraging the IMU preintegration method to define the global positional factors.
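The structure of the sliding-window cost described above can be written schematically as follows. The notation is illustrative and assumed here, not taken from the paper: \mathcal{X} denotes the states in the window, e_v, e_i and e_p the visual re-projection, inertial and global positional residuals, and W_v, W_i, W_p their weighting (information) matrices.

```latex
\mathcal{X}^{*} = \arg\min_{\mathcal{X}} \;
  \sum_{k} \sum_{j} \left\| e_{v}^{j,k} \right\|_{W_{v}}^{2}
  + \sum_{k} \left\| e_{i}^{k} \right\|_{W_{i}}^{2}
  + \sum_{k} \left\| e_{p}^{k} \right\|_{W_{p}}^{2}
```

Here k indexes the keyframes in the window and j the landmarks observed in keyframe k; the third sum is the term a loosely-coupled pipeline would lack, since there the global position is fused only after the VIO estimate has been computed.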

References

Data-driven Feature Tracking for Event Cameras

Because of their high temporal resolution, increased resilience to motion blur, and very sparse output, event cameras have been shown to be ideal for low-latency and low-bandwidth feature tracking, even in challenging scenarios. Existing feature tracking methods for event cameras are either handcrafted or derived from first principles but require extensive parameter tuning, are sensitive to noise, and do not generalize to different scenarios due to unmodeled effects. To tackle these deficiencies, we introduce the first data-driven feature tracker for event cameras, which leverages low-latency events to track features detected in a grayscale frame. We achieve robust performance via a novel frame attention module, which shares information across feature tracks. By directly transferring zero-shot from synthetic to real data, our data-driven tracker outperforms existing approaches in relative feature age by up to 120% while also achieving the lowest latency. This performance gap is further increased to 130% by adapting our tracker to real data with a novel self-supervision strategy.

References

Event-based Shape from Polarization

State-of-the-art solutions for Shape-from-Polarization (SfP) suffer from a speed-resolution tradeoff: they either sacrifice the number of polarization angles measured or necessitate lengthy acquisition times due to framerate constraints, thus compromising either accuracy or latency. We tackle this tradeoff using event cameras. Event cameras operate at microsecond resolution with negligible motion blur, and output a continuous stream of events that precisely measures how light changes over time asynchronously. We propose a setup that consists of a linear polarizer rotating at high speeds in front of an event camera. Our method uses the continuous event stream caused by the rotation to reconstruct relative intensities at multiple polarizer angles. Experiments demonstrate that our method outperforms physics-based baselines using frames, reducing the MAE by 25% on synthetic and real-world datasets. In the real world, we observe, however, that the challenging conditions (i.e., when few events are generated) harm the performance of physics-based solutions. To overcome this, we propose a learning-based approach that learns to estimate surface normals even at low event rates, improving the physics-based approach by 52% on the real-world dataset. The proposed system achieves an acquisition speed equivalent to 50 fps (more than twice the framerate of the commercial polarization sensor) while retaining the spatial resolution of 1 MP. Our evaluation is based on the first large-scale dataset for event-based SfP.

References

Event-based Shape from Polarization

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

Event-based Agile Object Catching with a Quadrupedal Robot

Quadrupedal robots are conquering various applications in indoor and outdoor environments due to their capability to navigate challenging uneven terrains. Exteroceptive information greatly enhances this capability since perceiving their surroundings allows them to adapt their controller and thus achieve higher levels of robustness. However, sensors such as LiDARs and RGB cameras do not provide sufficient information to quickly and precisely react in a highly dynamic environment since they suffer from a bandwidth-latency tradeoff. They require significant bandwidth at high frame rates while featuring significant perceptual latency at lower frame rates, thereby limiting their versatility on resource-constrained platforms. In this work, we tackle this problem by equipping our quadruped with an event camera, which does not suffer from this tradeoff due to its asynchronous and sparse operation. By leveraging the low latency of the events, we push the limits of quadruped agility and demonstrate high-speed ball catching with a net for the first time. We show that our quadruped equipped with an event camera can catch objects at maximum speeds of 15 m/s from 4 meters, with a success rate of 83%. With a VGA event camera, our method runs at 100 Hz on an NVIDIA Jetson Orin.

References

Learned Inertial Odometry for Autonomous Drone Racing

Inertial odometry is an attractive solution to the problem of state estimation for agile quadrotor flight. It is inexpensive, lightweight, and it is not affected by perceptual degradation. However, only relying on the integration of the inertial measurements for state estimation is infeasible. The errors and time-varying biases present in such measurements cause the accumulation of large drift in the pose estimates. Recently, inertial odometry has made significant progress in estimating the motion of pedestrians. State-of-the-art algorithms rely on learning a motion prior that is typical of humans but cannot be transferred to drones. In this work, we propose a learning-based odometry algorithm that uses an inertial measurement unit (IMU) as the only sensor modality for autonomous drone racing tasks. The core idea of our system is to couple a model-based filter, driven by the inertial measurements, with a learning-based module that has access to the thrust measurements. We show that our inertial odometry algorithm is superior to the state-of-the-art filter-based and optimization-based visual-inertial odometry as well as the state-of-the-art learned-inertial odometry in estimating the pose of an autonomous racing drone. Additionally, we show that our system is comparable to a visual-inertial odometry solution that uses a camera and exploits the known gate location and appearance. We believe that the application in autonomous drone racing paves the way for novel research in inertial odometry for agile quadrotor flight. We release the code open-source.

References

Learned Inertial Odometry for Autonomous Drone Racing

IEEE Robotics and Automation Letters (RA-L), 2023.

Agilicious: Open-Source and Open-Hardware Agile Quadrotor for Vision-Based Flight

We are excited to present Agilicious, a co-designed hardware and software framework tailored to autonomous, agile quadrotor flight. It is completely open-source and open-hardware and supports both model-based and neural-network-based controllers. Also, it provides high thrust-to-weight and torque-to-inertia ratios for agility, onboard vision sensors, GPU-accelerated compute hardware for real-time perception and neural-network inference, a real-time flight controller, and a versatile software stack. In contrast to existing frameworks, Agilicious offers a unique combination of flexible software stack and high-performance hardware. We compare Agilicious with prior works and demonstrate it on different agile tasks, using both model-based and neural-network-based controllers. Our demonstrators include trajectory tracking at up to 5 g and 70 km/h in a motion-capture system, and vision-based acrobatic flight and obstacle avoidance in both structured and unstructured environments using solely onboard perception. Finally, we demonstrate its use for hardware-in-the-loop simulation in virtual-reality environments. Thanks to its versatility, we believe that Agilicious supports the next generation of scientific and industrial quadrotor research. For more details check our paper, video and webpage.

References

Event-based Vision meets Deep Learning on Steering Prediction for Self-driving Cars

Event cameras are bio-inspired vision sensors that naturally capture the dynamics of a scene, filtering out redundant information. This paper presents a deep neural network approach that unlocks the potential of event cameras on a challenging motion-estimation task: prediction of a vehicle's steering angle. To make the best out of this sensor-algorithm combination, we adapt state-of-the-art convolutional architectures to the output of event sensors and extensively evaluate the performance of our approach on a publicly available large scale event-camera dataset (~1000 km). We present qualitative and quantitative explanations of why event cameras allow robust steering prediction even in cases where traditional cameras fail, e.g. challenging illumination conditions and fast motion. Finally, we demonstrate the advantages of leveraging transfer learning from traditional to event-based vision, and show that our approach outperforms state-of-the-art algorithms based on standard cameras.

References

Data-Efficient Collaborative Decentralized Thermal-Inertial Odometry

We propose a system solution to achieve data-efficient, decentralized state estimation for a team of flying robots using thermal images and inertial measurements. Each robot can fly independently, and exchange data when possible to refine its state estimate. Our system front-end applies an online photometric calibration to refine the thermal images so as to enhance feature tracking and place recognition. Our system back-end uses a covariance intersection fusion strategy to neglect the cross-correlation between agents so as to lower memory usage and computational cost. The communication pipeline uses Vector of Locally Aggregated Descriptors (VLAD) to construct a request-response policy that requires low bandwidth usage. We test our collaborative method on both synthetic and real-world data. Our results show that the proposed method improves trajectory estimation by up to 46% with respect to an individual-agent approach, while reducing the communication exchange by up to 89%. Datasets and code are released to the public, extending the already-public JPL xVIO library.
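For readers unfamiliar with covariance intersection, the sketch below applies the standard fusion rule, P^-1 = w * P1^-1 + (1 - w) * P2^-1, to two estimates whose cross-correlation is unknown. It is a simplified illustration, not the released back-end: covariances are assumed diagonal (stored as lists of variances), and the weight `w` is picked by a grid search that minimizes the trace of the fused covariance, which is one common choice and an assumption here. Function names are hypothetical.

```python
def covariance_intersection(x1, p1, x2, p2, w):
    """Fuse two estimates with diagonal covariances for a given weight w in [0, 1]."""
    fused_x, fused_p = [], []
    for a, var_a, b, var_b in zip(x1, p1, x2, p2):
        info = w / var_a + (1.0 - w) / var_b      # fused information (inverse variance)
        var = 1.0 / info
        fused_p.append(var)
        fused_x.append(var * (w * a / var_a + (1.0 - w) * b / var_b))
    return fused_x, fused_p


def fuse(x1, p1, x2, p2, grid=101):
    """Grid-search the weight that minimizes the trace of the fused covariance."""
    best = None
    for i in range(grid):
        w = i / (grid - 1)
        x, p = covariance_intersection(x1, p1, x2, p2, w)
        if best is None or sum(p) < sum(best[2]):
            best = (w, x, p)
    return best


# Two agents, each confident along a different axis.
w, x, p = fuse([0.0, 0.0], [1.0, 4.0], [2.0, 2.0], [4.0, 1.0])
```

Since no cross-covariance between agents has to be stored or transmitted, only each agent's own estimate and covariance enter the update, which is where the memory and computation savings come from.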

References

Data-Efficient Collaborative Decentralized Thermal-Inertial Odometry

IEEE Robotics and Automation Letters (RA-L), 2022

The Hilti SLAM Challenge Dataset

We release the Hilti SLAM Challenge Dataset! The sensor platform used to collect this dataset contains a number of visual, lidar and inertial sensors which have all been rigorously calibrated. All data is temporally aligned to support precise multi-sensor fusion. Each dataset includes accurate ground truth to allow direct testing of SLAM results. Raw data as well as intrinsic and extrinsic sensor calibration data from twelve datasets in various environments is provided. Each environment represents common scenarios found in building construction sites in various stages of completion. For more details, check out our paper.

References

ESS: Learning Event-based Semantic Segmentation from Still Images

References

Exploring Event Camera-based Odometry for Planetary Robots

References

Exploring Event Camera-based Odometry for Planetary Robots

IEEE Robotics and Automation Letters (RA-L), 2022.

Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High Speed Scenarios

In this paper, we present the first state estimation pipeline that leverages the complementary advantages of a standard camera with an event camera by fusing in a tightly-coupled manner events, standard frames, and inertial measurements. We show on the Event Camera Dataset that our hybrid pipeline leads to an accuracy improvement of 130% over event-only pipelines, and 85% over standard-frames only visual-inertial systems, while still being computationally tractable.

Furthermore, we use our pipeline to demonstrate - to the best of our knowledge - the first autonomous quadrotor flight using an event camera for state estimation, unlocking flight scenarios that were not reachable with traditional visual inertial odometry, such as low-light environments and high dynamic range scenes.

References

Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High Speed Scenarios

IEEE Robotics and Automation Letters (RA-L), 2018.

Event-aided Direct Sparse Odometry

We introduce EDS, a direct monocular visual odometry using events and frames. Our algorithm leverages the event generation model to track the camera motion in the blind time between frames. The method formulates a direct probabilistic approach of observed brightness increments. Per-pixel brightness increments are predicted using a sparse number of selected 3D points and are compared to the events via the brightness increment error to estimate camera motion. The method recovers a semi-dense 3D map using photometric bundle adjustment. EDS is the first method to perform 6-DOF VO using events and frames with a direct approach. By design it overcomes the problem of changing appearance in indirect methods. We also show that, for a target error performance, EDS can work at lower frame rates than state-of-the-art frame-based VO solutions. This opens the door to low-power motion-tracking applications where frames are sparingly triggered "on demand" and our method tracks the motion in between. We release code and datasets to the public.
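The event generation model referred to above is commonly stated as: a pixel fires an event whenever its log intensity has changed by a contrast threshold since the last event. The single-pixel sketch below illustrates that idealized model only. It is not the EDS code, and the threshold value, the sampling scheme and the function name are assumptions made for illustration.

```python
import math


def generate_events(intensities, timestamps, contrast=0.2):
    """Idealized event generation for one pixel.

    Emits (timestamp, polarity) each time the log intensity moves by
    `contrast` away from the reference level set at the previous event.
    """
    events = []
    reference = math.log(intensities[0])
    for t, intensity in zip(timestamps[1:], intensities[1:]):
        delta = math.log(intensity) - reference
        while abs(delta) >= contrast:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            reference += polarity * contrast   # move the reference by one threshold
            delta = math.log(intensity) - reference
    return events


# Brightness rises, then falls: two positive events followed by two negative ones.
events = generate_events([1.0, 1.7, 0.9], [0.0, 1.0, 2.0])
```

Summing the polarities over a time window and multiplying by the threshold gives the brightness increment observed at that pixel, the quantity that is compared against a predicted increment.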

References

Time Lens++: Event-based Frame Interpolation with Parametric Non-linear Flow and Multi-scale Fusion

References

Time Lens++: Event-based Frame Interpolation with Parametric Non-linear Flow and Multi-scale Fusion

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022, New Orleans, USA.

AEGNN: Asynchronous Event-based Graph Neural Networks

References

AEGNN: Asynchronous Event-based Graph Neural Networks

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022, New Orleans, USA.

Visual Attention Prediction Improves Performance of Autonomous Drone Racing Agents

Humans race drones faster than neural networks trained for end-to-end autonomous flight. This may be related to the ability of human pilots to select task-relevant visual information effectively. This work investigates whether neural networks capable of imitating human eye gaze behavior and attention can improve neural network performance for the challenging task of vision-based autonomous drone racing. We hypothesize that gaze-based attention prediction can be an efficient mechanism for visual information selection and decision making in a simulator-based drone racing task. We test this hypothesis using eye gaze and flight trajectory data from 18 human drone pilots to train a visual attention prediction model. We then use this visual attention prediction model to train an end-to-end controller for vision-based autonomous drone racing using imitation learning. We compare the drone racing performance of the attention-prediction controller to those using raw image inputs and image-based abstractions (i.e., feature tracks). Comparing success rates for completing a challenging race track by autonomous flight, our results show that the attention-prediction based controller (88% success rate) outperforms the RGB-image (61% success rate) and feature-tracks (55% success rate) controller baselines. Furthermore, visual attention-prediction and feature-track based models showed better generalization performance than image-based models when evaluated on hold-out reference trajectories. Our results demonstrate that human visual attention prediction improves the performance of autonomous vision-based drone racing agents and provides an essential step towards vision-based, fast, and agile autonomous flight that eventually can reach and even exceed human performance.

References

Minimum-Time Quadrotor Waypoint Flight in Cluttered Environments

Planning minimum-time trajectories in cluttered environments with obstacles is a challenging problem. The quadrotor has to fly on the edge of its capabilities and, at the same time, avoid obstacles. However, planning such trajectories is vital for applications like search and rescue, where after disasters, it is essential to search for survivors as quickly as possible. Nevertheless, planning minimum-time trajectories in cluttered environments has not been addressed before in its entirety, using the full quadrotor model that can leverage the full actuation of the platform. We address this problem by using a hierarchical, sampling-based method with an incrementally more complex quadrotor model. The proposed method outperforms all related baselines in cluttered environments and is further validated in real-world flights at over 60 km/h.

References

Continuous-Time vs. Discrete-Time Vision-based SLAM: A Comparative Study

References

Bridging the Gap between Events and Frames through Unsupervised Domain Adaptation

Event cameras are novel sensors with outstanding properties such as high temporal resolution and high dynamic range. Despite these characteristics, event-based vision has been held back by the shortage of labeled datasets due to the novelty of event cameras. To overcome this drawback, we propose a task transfer method that allows models to be trained directly with labeled images and unlabeled event data. Compared to previous approaches, (i) our method transfers from single images to events instead of high frame rate videos, and (ii) does not rely on paired sensor data. To achieve this, we leverage the generative event model to split event features into content and motion features. This feature split enables efficient matching of the latent spaces for events and images, which is crucial for a successful task transfer. Thus, our approach unlocks the vast amount of existing image datasets for the training of event-based neural networks. Our task transfer method consistently outperforms methods applicable in the Unsupervised Domain Adaptation setting for object detection by 0.26 mAP (an increase of 93%) and classification by 2.7% accuracy.

References

Perception-Aware Perching on Powerlines with Multirotors

Multirotor aerial robots are becoming widely used for the inspection of powerlines. To enable continuous, robust inspection without human intervention, the robots must be able to perch on the powerlines to recharge their batteries. Highly versatile perching capabilities are necessary to adapt to the variety of configurations and constraints that are present in real powerline systems. This paper presents a novel perching trajectory generation framework that computes perception-aware, collision-free, and dynamically-feasible maneuvers to guide the robot to the desired final state. Trajectory generation is achieved via solving a Nonlinear Programming problem using the Primal-Dual Interior Point method. The problem considers the full dynamic model of the robot down to its single rotor thrusts and minimizes the final pose and velocity errors while avoiding collisions and maximizing the visibility of the powerline during the maneuver. The generated maneuvers consider both the perching and the posterior recovery trajectories. The framework adopts costs and constraints defined by efficient mathematical representations of powerlines, enabling online onboard execution in resource-constrained hardware. The method is validated on-board an agile quadrotor conducting powerline inspection and various perching maneuvers with final pitch values of up to 180 degrees.

References

Policy Search for Model Predictive Control with Application to Agile Drone Flight

Policy Search and Model Predictive Control (MPC) are two different paradigms for robot control: policy search has the strength of automatically learning complex policies using experienced data, while MPC can offer optimal control performance using models and trajectory optimization. An open research question is how to leverage and combine the advantages of both approaches. In this work, we provide an answer by using policy search for automatically choosing high-level decision variables for MPC, which leads to a novel policy-search-for-model-predictive-control framework. Specifically, we formulate the MPC as a parameterized controller, where the hard-to-optimize decision variables are represented as high-level policies. Such a formulation allows optimizing policies in a self-supervised fashion. We validate this framework by focusing on a challenging problem in agile drone flight: flying a quadrotor through fast-moving gates. Experiments show that our controller achieves robust and real-time control performance in both simulation and the real world. The proposed framework offers a new perspective for merging learning and control.

References

Policy Search for Model Predictive Control with Application to Agile Drone Flight

IEEE Transactions on Robotics (T-RO), 2022.

SVO Pro

We are excited to release fully open source SVO Pro! SVO Pro is the latest version of SVO developed over the past few years in our lab. SVO Pro features support for different camera models, active exposure control, a sliding-window-based backend, and global bundle adjustment with loop closure. Check out the project page and the code on GitHub!

References

ESL: Event-based Structured Light

References

ESL: Event-based Structured Light

International Conference on 3D Vision (3DV), 2021.

E-RAFT: Dense Optical Flow from Event Cameras

We propose to incorporate feature correlation and sequential processing into dense optical flow estimation from event cameras. Modern frame-based optical flow methods heavily rely on matching costs computed from feature correlation. In contrast, there exists no optical flow method for event cameras that explicitly computes matching costs. Instead, learning-based approaches using events usually resort to the U-Net architecture to estimate optical flow sparsely. Our key finding is that introducing correlation features significantly improves results compared to previous methods that solely rely on convolution layers. Compared to the state of the art, our proposed approach computes dense optical flow and reduces the end-point error by 23% on MVSEC. Furthermore, we show that all existing optical flow methods developed so far for event cameras have been evaluated on datasets with very small displacement fields, with a maximum flow magnitude of 10 pixels. Based on this observation, we introduce a new real-world dataset that exhibits displacement fields with magnitudes up to 210 pixels and 3 times higher camera resolution. Our proposed approach reduces the end-point error on this dataset by 66%.
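To make the notion of "matching costs computed from feature correlation" concrete, here is a minimal sketch of an all-pairs correlation volume between two sets of per-pixel feature vectors. It is an illustration only and does not reproduce the E-RAFT architecture; the function names `correlation_volume` and `best_matches` and the toy features are assumptions made for this example.

```python
def correlation_volume(features_a, features_b):
    """Return corr[i][j] = dot product of feature i in A and feature j in B.

    Each input is a list of per-pixel feature vectors (lists of floats).
    A higher correlation means a better match between the two pixels.
    """
    return [
        [sum(p * q for p, q in zip(fa, fb)) for fb in features_b]
        for fa in features_a
    ]


def best_matches(volume):
    """For every pixel in A, return the index of the best-matching pixel in B."""
    return [max(range(len(row)), key=row.__getitem__) for row in volume]


# Toy example (hypothetical features): the two pixels swap places between views.
features_a = [[1.0, 0.0], [0.0, 1.0]]
features_b = [[0.0, 1.0], [1.0, 0.0]]
volume = correlation_volume(features_a, features_b)
matches = best_matches(volume)
```

In a learned pipeline, the feature vectors would come from a network and the volume would be fed to later stages rather than reduced with a hard argmax; the argmax here only shows how correlation acts as a matching score.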

References

Learning High-Speed Flight in the Wild

This is the algorithm presented in our Science Robotics paper Learning High-Speed Flight in the Wild. Check out the code here. The code allows you to train end-to-end navigation policies to fly drones in previously unknown, challenging environments (snowy terrains, derailed trains, ruins, thick vegetation, and collapsed buildings), with only onboard sensing and computation. For more details, check out our paper.

References

Learning High-Speed Flight in the Wild

Science Robotics, 2021.

Time-Optimal Planning for Quadrotor Waypoint Flight

This is the planning algorithm presented in our Science Robotics paper Time-Optimal Planning for Quadrotor Waypoint Flight. Check out the code here; it comes with a simple example! This is the first method to allow planning time-optimal trajectories at the boundary of the performance envelope, correctly accounting for the single rotor limits of a quadrotor vehicle. For more details, check out our paper.

References

Powerline Tracking with Event Cameras

We release the event-based line tracker algorithm from our IROS paper Powerline Tracking with Event Cameras. Check out the code here! Our algorithm identifies lines in the stream of events by detecting planes in the spatio-temporal signal, and tracks them through time. The implementation runs onboard resource-constrained quadrotors and is capable of detecting multiple distinct lines in real time with rates of up to 320 thousand events per second. The tracker is able to persistently track the powerlines, with a mean line lifetime 10x longer than existing approaches. For more details, check out our paper.
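The idea that a moving line traces out a plane in the spatio-temporal (x, y, t) volume can be illustrated with a plain least-squares plane fit. The sketch below is not the released tracker: the parameterization t = a*x + b*y + c, the helper names `det3` and `fit_plane`, and the synthetic events are assumptions chosen for brevity. This parameterization cannot represent a static line, whose plane is parallel to the time axis.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(events):
    """Least-squares fit of t = a*x + b*y + c to a list of (x, y, t) events."""
    sxx = sxy = sx = syy = sy = n = 0.0
    sxt = syt = st = 0.0
    for x, y, t in events:
        sxx += x * x
        sxy += x * y
        sx += x
        syy += y * y
        sy += y
        n += 1.0
        sxt += x * t
        syt += y * t
        st += t
    # Normal equations A * [a, b, c]^T = rhs, solved with Cramer's rule.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxt, syt, st]
    det = det3(A)
    if abs(det) < 1e-12:
        raise ValueError("degenerate event set: cannot fit a plane")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / det)
    return tuple(coeffs)


# Synthetic events from a vertical line moving at 50 px/s, starting at x = 10.
events = [(10.0 + 50.0 * (0.01 * k), float(y), 0.01 * k)
          for y in range(20) for k in range(10)]
a, b, c = fit_plane(events)  # a is close to 1/50, b to 0, c to -10/50
```

For this synthetic input, the fitted slope `a` is the inverse of the line's horizontal speed, which shows how the plane orientation encodes the motion of the line.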

References

EVO: Event-based, 6-DOF Parallel Tracking and Mapping in Real-Time

We release EVO, an Event-based Visual Odometry algorithm from our RA-L paper EVO: Event-based, 6-DOF Parallel Tracking and Mapping in Real-Time. The code is implemented in C++ and runs in real-time on a laptop. Try it out for yourself on GitHub! Our algorithm successfully leverages the outstanding properties of event cameras to track fast camera motions while recovering a semi-dense 3D map of the environment. The implementation outputs up to several hundred pose estimates per second. Due to the nature of event cameras, our algorithm is unaffected by motion blur and operates very well in challenging, high dynamic range conditions with strong illumination changes.

References

NeuroBEM: Hybrid Aerodynamic Quadrotor Model

We release the full dataset associated with our upcoming RSS paper NeuroBEM: Hybrid Aerodynamic Quadrotor Model. The dataset features over 1h15min of highly aggressive maneuvers recorded at high accuracy in one of the world's largest optical tracking volumes. We provide time-aligned quadrotor state and motor commands recorded at 400 Hz in a curated dataset. For more details, check out our paper and dataset.

References

GPU-Accelerated Frontend for High-Speed VIO now as ROS node

The recent introduction of powerful embedded GPUs has enabled algorithms to run well above the standard video rates, yielding higher information processing capability and reduced latency. This code introduces an enhanced FAST feature detector that applies a GPU-specific non-maxima suppression, imposes spatial feature distribution, and extracts features simultaneously. It comes as a ROS node and runs on most modern NVIDIA CUDA-capable GPUs, but is further specialized with the Jetson TX2 platform in mind, on which it achieves a throughput of more than 1000 fps.

References

Faster than FAST: GPU-Accelerated Frontend for High-Speed VIO

IEEE International Conference on Intelligent Robots and Systems, 2020.

TimeLens: Event-based Video Frame Interpolation

References

TimeLens: Event-based Video Frame Interpolation

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, 2021.

How to Calibrate Your Event Camera

References

AutoTune: Controller Tuning for High-Speed Flight

The following code allows you to automatically tune your controller on the task of high-speed flight, where our approach outperforms the state of the art. In contrast to previous work, our algorithm does not assume any prior knowledge of the drone or of the optimization function and can deal with the multi-modal characteristics of the parameters' optimization space.

We propose AutoTune, a sampling method based on Metropolis-Hastings sampling that can tune a controller to fly faster than ever before. Among other results, AutoTune improves tracking error when flying a physical platform with respect to parameters tuned by a human expert.
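As a rough illustration of how Metropolis-Hastings sampling can explore a multi-modal parameter space, here is a generic sketch on a toy one-dimensional problem. It is not the AutoTune implementation: the cost function `toy_tracking_cost`, the Gaussian proposal, the temperature, and all default values are hypothetical choices made for this example.

```python
import math
import random


def toy_tracking_cost(gain):
    """Hypothetical multi-modal cost standing in for a measured tracking error.

    It has two local minima, near gain = 2 and gain = -1.
    """
    return (gain - 2.0) ** 2 * (gain + 1.0) ** 2 / 4.0 + 0.1 * gain


def metropolis_hastings(cost, x0, steps=5000, step_size=0.5,
                        temperature=0.1, seed=0):
    """Sample from exp(-cost(x) / temperature) and keep the best sample seen."""
    rng = random.Random(seed)
    x, cx = x0, cost(x0)
    best_x, best_c = x, cx
    for _ in range(steps):
        # Symmetric Gaussian proposal around the current sample.
        cand = x + rng.gauss(0.0, step_size)
        cc = cost(cand)
        # Always accept improvements; accept worse candidates with
        # probability exp(-(cc - cx) / temperature).
        if cc <= cx or rng.random() < math.exp(-(cc - cx) / temperature):
            x, cx = cand, cc
            if cx < best_c:
                best_x, best_c = x, cx
    return best_x, best_c


best_gain, best_cost = metropolis_hastings(toy_tracking_cost, x0=3.0)
```

Because worse candidates are sometimes accepted, the sampler can leave a local minimum instead of getting trapped, which is the property that makes this family of methods attractive for multi-modal tuning problems. On a real platform, each cost evaluation would correspond to flying a trajectory and measuring the error.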

References

DSEC: A Stereo Event Camera Dataset for Driving Scenarios

References

DSEC: A Stereo Event Camera Dataset for Driving Scenarios

IEEE Robotics and Automation Letters (RA-L), 2021.


Human-Piloted Drone Racing: Visual Processing and Control

Humans race drones faster than algorithms, despite being limited to a fixed camera angle, body rate control, and response latencies on the order of hundreds of milliseconds. A better understanding of the ability of human pilots to select appropriate motor commands from highly dynamic visual information may provide key insights for solving current challenges in vision-based autonomous navigation. This paper investigates the relationship between human eye movements, control behavior, and flight performance in a drone racing task. We collected a multimodal dataset from 21 experienced drone pilots using a highly realistic drone racing simulator, also used to recruit professional pilots. Our results show task-specific improvements in drone racing performance over time. In particular, we found that eye gaze tracks future waypoints (i.e., gates), with first fixations occurring on average 1.5 seconds and 16 meters before reaching the gate. Moreover, human pilots consistently looked at the inside of the future flight path for lateral (i.e., left and right turns) and vertical maneuvers (i.e., ascending and descending). Finally, we found a strong correlation between pilots' eye movements and the commanded direction of quadrotor flight, with an average visual-motor response latency of 220 ms. These results highlight the importance of coordinated eye movements in human-piloted drone racing. We make our dataset publicly available.

References

ESIM: an Open Event Camera Simulator now with GPU support!

Event cameras are revolutionary sensors that work radically differently from standard cameras. Instead of capturing intensity images at a fixed rate, event cameras measure changes of intensity asynchronously, in the form of a stream of events, which encode per-pixel brightness changes. In the last few years, their outstanding properties (asynchronous sensing, no motion blur, high dynamic range) have led to exciting vision applications, with very low-latency and high robustness.

We present ESIM: an efficient event camera simulator implemented in C++ and available open source, now with additional Python bindings and GPU support! ESIM can simulate arbitrary camera motion in 3D scenes, while providing events, standard images, and inertial measurements, with full ground truth information including camera pose, velocity, as well as depth and optical flow maps.
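The per-pixel behavior described above can be sketched with an idealized event generation model: a pixel emits an event each time its log intensity changes by a fixed contrast threshold. The snippet below is a simplified illustration and not ESIM's implementation or API; the function name `events_between`, the threshold value, and the omission of timestamps and sensor noise are assumptions made for this example.

```python
import math


def events_between(prev_frame, next_frame, threshold=0.5, eps=1e-3):
    """Return (x, y, polarity) events for the change between two frames.

    Frames are nested lists of non-negative intensities. Each pixel emits
    one event per full `threshold` step of log-intensity change; polarity
    is +1 for brightening and -1 for darkening. Timestamps are omitted.
    """
    events = []
    for y, (row0, row1) in enumerate(zip(prev_frame, next_frame)):
        for x, (i0, i1) in enumerate(zip(row0, row1)):
            delta = math.log(i1 + eps) - math.log(i0 + eps)
            polarity = 1 if delta > 0 else -1
            for _ in range(int(abs(delta) / threshold)):
                events.append((x, y, polarity))
    return events


# One pixel brightens, one darkens, and two stay constant (no events).
prev_frame = [[0.1, 0.5], [0.5, 0.5]]
next_frame = [[0.5, 0.5], [0.5, 0.1]]
events = events_between(prev_frame, next_frame)
```

Pixels whose brightness does not change produce no output at all, which is why event streams are sparse for static scenes.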

References

ESIM: an Open Event Camera Simulator

Conference on Robot Learning (CoRL), Zurich, 2018.

Combining Events and Frames using Recurrent Asynchronous Multimodal Networks for Monocular Depth Prediction

Event cameras are novel vision sensors that report per-pixel brightness changes as a stream of asynchronous "events". They offer significant advantages compared to standard cameras due to their high temporal resolution, high dynamic range and lack of motion blur. However, events only measure the varying component of the visual signal, which limits their ability to encode scene context. By contrast, standard cameras measure absolute intensity frames, which capture a much richer representation of the scene. Both sensors are thus complementary. However, due to the asynchronous nature of events, combining them with synchronous images remains challenging, especially for learning-based methods. This is because traditional recurrent neural networks (RNNs) are not designed for asynchronous and irregular data from additional sensors. To address this challenge, we introduce Recurrent Asynchronous Multimodal (RAM) networks, which generalize traditional RNNs to handle asynchronous and irregular data from multiple sensors. Inspired by traditional RNNs, RAM networks maintain a hidden state that is updated asynchronously and can be queried at any time to generate a prediction. We apply this novel architecture to monocular depth estimation with events and frames where we show an improvement over state-of-the-art methods by up to 30% in terms of mean absolute depth error. To enable further research on multimodal learning with events, we release EventScape, a new dataset with events, intensity frames, semantic labels, and depth maps recorded in the CARLA simulator.

References

Combining Events and Frames using Recurrent Asynchronous Multimodal Networks for Monocular Depth Prediction

IEEE Robotics and Automation Letters (RA-L), 2021.

Data-Driven MPC for Quadrotors

Aerodynamic forces render accurate high-speed trajectory tracking with quadrotors extremely challenging. These complex aerodynamic effects become a significant disturbance at high speeds, introducing large positional tracking errors, and are extremely difficult to model. To fly at high speeds, feedback control must be able to account for these aerodynamic effects in real-time. This necessitates a modelling procedure that is both accurate and efficient to evaluate. Therefore, we present an approach to model aerodynamic effects using Gaussian Processes, which we incorporate into a Model Predictive Controller to achieve efficient and precise real-time feedback control, leading to up to 70% reduction in trajectory tracking error at high speeds. We verify our method by extensive comparison to a state-of-the-art linear drag model in synthetic and real-world experiments at speeds of up to 14 m/s and accelerations beyond 4g.
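To give a feel for how a Gaussian Process can model an unknown aerodynamic residual, the following sketch fits a one-dimensional GP mean with an RBF kernel to synthetic data. It is a textbook GP regression and not the released controller code: the choice of speed as the only input, the quadratic toy residual, the kernel hyperparameters, and the function names are all assumptions made for this example.

```python
import math


def rbf(a, b, length_scale=2.0, variance=1.0):
    """Squared-exponential (RBF) kernel between two scalars."""
    return variance * math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def gp_fit(xs, ys, noise=1e-6):
    """Return the weights alpha = (K + noise * I)^-1 y."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    return solve(K, ys)


def gp_predict(x_query, xs, alpha):
    """Posterior mean of the GP at x_query."""
    return sum(rbf(x_query, x) * a for x, a in zip(xs, alpha))


# Hypothetical training data: speed in m/s versus residual acceleration in m/s^2.
speeds = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0]
residuals = [-0.02 * v * v for v in speeds]
alpha = gp_fit(speeds, residuals)
predicted = gp_predict(6.0, speeds, alpha)  # close to the training value -0.72
```

Once `alpha` is computed offline, a prediction costs only one kernel evaluation per training point, which hints at why keeping the training set small matters when the model must be evaluated inside a real-time control loop.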

References

Autonomous Quadrotor Flight despite Rotor Failure with Onboard Vision Sensors

You can check out the source code of our fault-tolerant flight controller, which controls a quadrotor after motor failure using the nonlinear dynamic inversion approach. You can test our control algorithm in a simulator or in real flights. The source code includes a vision-based state estimator for pose estimation that works even while the quadrotor spins at over 20 rad/s. We also release data logged from an onboard camera together with the IMU measurements.

References

Autonomous Quadrotor Flight despite Rotor Failure with Onboard Vision Sensors: Frames vs. Events

IEEE Robotics and Automation Letters (RA-L), 2021.

Reference Pose Verification and Generation for Visual Localization

High-quality datasets with accurate 6 Degree-of-Freedom (DoF) reference poses are the foundation for benchmarking and improving existing visual localization methods. Although generating reference poses is not a trivial task (e.g., images may be taken under drastically different conditions), there is little work focusing on it. By making use of learned local features and view synthesis, we propose a framework to verify/refine the reference poses of existing datasets and generate new reference poses. Using our framework, we greatly improve the reference pose accuracy of the popular Aachen Day-Night dataset and extend the dataset with new nighttime images. The new dataset, namely the Aachen Day-Night v1.1 dataset, has been integrated into the online visual localization benchmarking service.

Aachen Day-Night dataset v1.1 on the Visual Localization Benchmark

References

Reference Pose Generation for Long-term Visual Localization via Learned Features and View Synthesis

International Journal of Computer Vision (IJCV), 2020.

Reducing the Sim-to-Real Gap for Event Cameras