SVO Pro: Semi-direct Visual-Inertial Odometry and SLAM

for Monocular, Stereo, and Wide Angle Cameras

Code

GitHub repository

This repo includes SVO Pro, the newest version of Semi-direct Visual Odometry (SVO), developed over the past few years in our lab.

Description

SVO Pro extends the previous SVO version (IEEE TRO'17). It adds support for different camera models, active exposure control, a sliding-window-based backend, and global bundle adjustment with loop closure.

What is SVO? SVO uses a semi-direct paradigm to estimate the 6-DOF motion of a camera system from both pixel intensities (direct) and features. It avoids time-consuming feature extraction and matching procedures, while achieving better accuracy by using the pixel intensities directly.
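To make the "direct" part concrete, here is a minimal sketch of aligning a small patch by minimizing photometric error (the sum of squared intensity differences) instead of matching descriptors. It is a toy under stated assumptions: the names `patch_ssd`, `align_patch`, and `make_image` are hypothetical, the images are synthetic, and the search is a brute-force scan over integer 2D shifts. It is not SVO's implementation, which estimates the full 6-DOF motion.

```python
def patch_ssd(ref, cur, u, v, du, dv, half=1):
    """Sum of squared intensity differences between the patch in `ref`
    centered at (u, v) and the patch in `cur` centered at (u + du, v + dv)."""
    total = 0.0
    for y in range(-half, half + 1):
        for x in range(-half, half + 1):
            diff = cur[v + dv + y][u + du + x] - ref[v + y][u + x]
            total += diff * diff
    return total


def align_patch(ref, cur, u, v, search=2, half=1):
    """Brute-force search for the integer shift (du, dv) with the lowest
    photometric error. No feature extraction or descriptor matching is used."""
    best = None
    for dv in range(-search, search + 1):
        for du in range(-search, search + 1):
            cost = patch_ssd(ref, cur, u, v, du, dv, half)
            if best is None or cost < best[0]:
                best = (cost, du, dv)
    return best[1], best[2]


def make_image(width, height, shift_x=0, shift_y=0):
    """Synthetic textured image (assumption: stands in for a camera frame).
    A positive shift moves the texture right and down."""
    return [[((x - shift_x) * 7 + (y - shift_y) * 13) % 31
             for x in range(width)]
            for y in range(height)]


ref = make_image(12, 12)                        # reference frame
cur = make_image(12, 12, shift_x=1, shift_y=2)  # same texture, shifted
print(align_patch(ref, cur, 5, 5))              # (1, 2)
```

The recovered shift comes purely from comparing intensities in a 3x3 patch, which is the idea the "direct" label refers to.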

What does SVO Pro include? SVO Pro offers the following functionalities:

- Visual odometry: The most recent version of SVO, which supports perspective and fisheye/catadioptric cameras in a monocular or stereo setup. It also includes active exposure control.

- Visual-inertial odometry: SVO frontend + visual-inertial sliding window optimization backend (modified from OKVIS).

- Visual-inertial SLAM: SVO frontend + visual-inertial sliding window optimization backend + globally bundle-adjusted map (using iSAM2). The global map is updated in real time, thanks to iSAM2, and used for localization at frame rate.

- Visual-inertial SLAM with loop closure: Loop closures, detected via DBoW2, are integrated into the global bundle adjustment. Pose graph optimization is also included as a lightweight alternative to the global bundle adjustment.
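As a rough illustration of what pose graph optimization with a loop closure does, the sketch below solves a one-dimensional toy problem in plain Python. Everything in it is an assumption made for illustration: the poses are scalars rather than 6-DOF poses, the measurements are invented, all edges have equal weight, and the solver is simple gradient descent. The names `dead_reckoning` and `optimize_pose_graph` are hypothetical and do not correspond to the SVO Pro code.

```python
def dead_reckoning(odometry):
    """Chain relative motions into absolute poses, starting at 0."""
    poses = [0.0]
    for step in odometry:
        poses.append(poses[-1] + step)
    return poses


def optimize_pose_graph(initial, edges, iterations=500, step_size=0.1):
    """Minimize the sum of (x[j] - x[i] - z)^2 over edges (i, j, z) by
    gradient descent. Pose 0 is held fixed to anchor the solution."""
    x = list(initial)
    for _ in range(iterations):
        grad = [0.0] * len(x)
        for i, j, z in edges:
            residual = x[j] - x[i] - z
            grad[j] += 2.0 * residual
            grad[i] -= 2.0 * residual
        for k in range(1, len(x)):  # skip pose 0, which stays fixed
            x[k] -= step_size * grad[k]
    return x


# Invented data: each odometry step reports 1.1, so drift accumulates.
odometry = [1.1, 1.1, 1.1, 1.1]
initial = dead_reckoning(odometry)

edges = [(k, k + 1, odometry[k]) for k in range(len(odometry))]
edges.append((0, 4, 4.0))  # loop closure: pose 4 observed 4.0 from pose 0

optimized = optimize_pose_graph(initial, edges)
print([round(p, 3) for p in initial])    # [0.0, 1.1, 2.2, 3.3, 4.4]
print([round(p, 3) for p in optimized])  # [0.0, 1.02, 2.04, 3.06, 4.08]
```

The single loop-closure edge pulls the last pose from 4.4 toward 4.0 and spreads the correction over the whole trajectory. Only poses are optimized here, not landmarks, which is why a pose graph is cheaper than a full bundle adjustment.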

SVO is both versatile and efficient. First, it works with different types of cameras, from common projective cameras to catadioptric ones. It also supports stereo and multi-camera setups, so it can be tailored to different scenarios. Second, SVO requires very few computational resources compared to most existing algorithms. It can reach up to 400 frames per second on an i7 processor (while using fewer than 2 cores!) and up to 100 fps on a smartphone-class processor (e.g., the one in an Odroid XU4).
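For readers comparing these frame rates with per-frame processing times in milliseconds, the conversion is a one-liner. The helper name `frame_budget_ms` is hypothetical, and the result is only the average time per frame implied by a frame rate, not a measured timing.

```python
def frame_budget_ms(fps):
    """Average time per frame, in milliseconds, implied by a frame rate."""
    return 1000.0 / fps


for label, fps in [("i7 processor", 400), ("smartphone-class processor", 100)]:
    print(f"{label}: {fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# i7 processor: 400 fps -> 2.5 ms per frame
# smartphone-class processor: 100 fps -> 10.0 ms per frame
```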

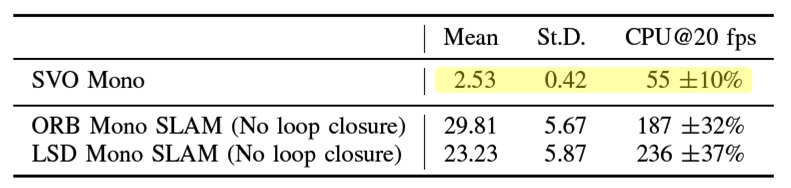

Processing time in milliseconds on a laptop with an Intel Core i7 (2.80 GHz) processor.

(For more details about the performance of SVO 2.0, please refer to the SVO 2.0 paper: IEEE TRO'17.)

Due to its flexibility and efficiency, SVO has been used successfully since 2014 in a variety of applications, including state estimation for micro aerial vehicles, automotive, and virtual reality applications. In all of these applications, SVO ran fully onboard! You can find a non-exhaustive list below.

Autonomous Drone Navigation

| 20 m/s flight on DARPA FLA drone | Fast autonomous flight |
|---|---|
| Autonomous landing | Automatic recovery after tossing drone in the air |
| Selfie drone in 3D | Aerial-ground collaboration |
| Search and rescue | On-the-spot training |
| Air-ground localization | Collaborative Visual SLAM (up to 3 drones) |

Automotive

| Car with four cameras | ZurichEye (lab spinoff) |
|---|---|

3D Scanning

| Dense reconstruction | Flying 3D scanner |
|---|---|

Commercial Applications

|  | Inside-out tracking with SVO + IMU on an iPhone (Dacuda) |
|---|---|
| Room-scale VR with a smartphone (Dacuda) | Automotive car navigation (lab spinoff ZurichEye, now Facebook-Oculus VR Zurich) |

References

SVO: Semi-Direct Visual Odometry for Monocular and Multi-Camera Systems

IEEE Transactions on Robotics, Vol. 33, Issue 2, pages 249-265, Apr. 2017.

Includes comparison against ORB-SLAM, LSD-SLAM, and DSO and comparison among Dense, Semi-dense, and Sparse Direct Image Alignment.

SVO: Fast Semi-Direct Monocular Visual Odometry

IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

REMODE: Probabilistic, Monocular Dense Reconstruction in Real Time

IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

Acknowledgements

The development of SVO was funded by the Swiss National Science Foundation (project number 200021-143607, Swarm of Flying Cameras), the National Center of Competence in Research Robotics (NCCR), the UZH Forschungskredit, and the SNSF-ERC Starting Grant.