Event-based, Direct Camera Tracking

Paper:

Samuel Bryner, Guillermo Gallego, Henri Rebecq, Davide Scaramuzza,

Event-based, Direct Camera Tracking from a Photometric 3D Map using Nonlinear Optimization,

IEEE International Conference on Robotics and Automation (ICRA), 2019.

Source code:

direct_event_camera_tracker

Video:

Poster:

Poster used for presentation at ICRA 2019.

Master Thesis:

MS Thesis and presentation, 2018.

Datasets:

Citation

If you use these data, please cite this publication as follows:

    @InProceedings{Bryner19icra,
      author    = {Samuel Bryner and Guillermo Gallego and Henri Rebecq and Davide Scaramuzza},
      title     = {Event-based, Direct Camera Tracking from a Photometric {3D} Map using Nonlinear Optimization},
      booktitle = {{IEEE} Int. Conf. Robot. Autom. (ICRA)},
      year      = 2019
    }

    @Article{Gallego18pami,
      author  = {Guillermo Gallego and Jon E. A. Lund and Elias Mueggler and Henri Rebecq and Tobi Delbruck and Davide Scaramuzza},
      title   = {Event-based, 6-{DOF} Camera Tracking from Photometric Depth Maps},
      journal = {{IEEE} Trans. Pattern Anal. Mach. Intell.},
      year    = 2018,
      volume  = 40,
      number  = 10,
      pages   = {2402--2412},
      month   = oct,
      doi     = {10.1109/TPAMI.2017.2769655}
    }

Description

These are the datasets used in the ICRA'19 paper "Event-based, Direct Camera Tracking from a Photometric 3D Map using Nonlinear Optimization". Every dataset consists of one or more trajectories of an event camera (stored as a rosbag) and a corresponding photometric map, in the form of a point cloud for real data and a textured mesh for simulated scenes. All datasets contain ground truth provided by a motion capture system (for indoor recordings), SVO (for outdoor ones) or the simulator itself. The respective calibration data is provided as well (both the raw data used for calibration and the resulting intrinsic and extrinsic parameters).

The rosbag files contain the events using dvs_msgs/EventArray message types. The images, camera calibration, and IMU measurements use the standard sensor_msgs/Image, sensor_msgs/CameraInfo, and sensor_msgs/Imu message types, respectively. Ground truth poses are provided as geometry_msgs/PoseStamped message type.
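As a rough illustration of how the event messages described above might be consumed, the following sketch flattens a sequence of dvs_msgs/EventArray messages into one time-ordered list. It relies on the dvs_msgs/Event fields `x`, `y`, `ts` and `polarity`. The helper names, the `Event` tuple, the mapping of polarity to +1/-1, and the topic name passed to the reader are assumptions made for this example and are not part of the dataset or of the authors' code.

```python
from collections import namedtuple

# Illustrative container (not part of dvs_msgs): time in seconds, pixel
# coordinates, and polarity mapped to +1 / -1 by our own convention.
Event = namedtuple("Event", ["t", "x", "y", "polarity"])


def flatten_event_arrays(event_array_msgs):
    """Flatten EventArray-like messages into a time-ordered list of Event tuples.

    Each message must expose an `events` sequence whose items have the fields
    x, y, polarity and a timestamp `ts` with a to_sec() method, as ROS time
    objects do.
    """
    events = []
    for msg in event_array_msgs:
        for e in msg.events:
            events.append(Event(e.ts.to_sec(), e.x, e.y, 1 if e.polarity else -1))
    # Sort by timestamp so events from different messages end up in time order.
    events.sort(key=lambda ev: ev.t)
    return events


def read_events_from_bag(bag_path, event_topic):
    """Read all events published on `event_topic` from a rosbag file.

    Requires a ROS 1 Python environment with the `rosbag` package installed.
    `event_topic` is a placeholder: inspect the bag (e.g. with `rosbag info`)
    to find the actual topic name.
    """
    import rosbag  # imported lazily so flatten_event_arrays works without ROS

    with rosbag.Bag(bag_path) as bag:
        msgs = [msg for _, msg, _ in bag.read_messages(topics=[event_topic])]
    return flatten_event_arrays(msgs)
```

A call would look like `read_events_from_bag(path_to_bag, topic_name)`, where both arguments depend on the downloaded file. The same `read_messages` loop could be pointed at the image, camera info, IMU, or pose topics, since those use standard ROS message types.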

Scenes


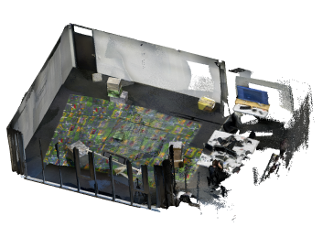

Room

The main sequence used in our paper. This is a 3D scan of one of the rooms in our lab, made with an ASUS XtionPro RGB-D camera and ElasticFusion.

Trajectories

Point cloud created using RGB-D SLAM (ElasticFusion)

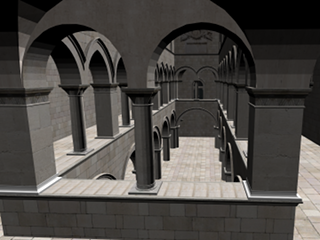

Atrium

Atrium of the Sponza Palace in Dubrovnik, Croatia. Simulated using the event camera simulator.

Trajectories

Map

Source: http://hdri.cgtechniques.com/~sponza/files/ (Copyright by Marko Dabrovic / cgtechniques.com 2002)

Toy Room

Simulated scene consisting of a few textured boxes on a floor. Simulated using the event camera simulator.

Trajectories

IEEE Trans. PAMI 2018

These are sequences used in the paper:

G. Gallego, J. E. A. Lund, E. Mueggler, H. Rebecq, T. Delbruck, D. Scaramuzza. "Event-based, 6-DOF Camera Tracking from Photometric Depth Maps." IEEE Trans. Pattern Anal. Mach. Intell., 40(10):2402-2412, Oct. 2018.

We provide three different scenes: an indoor one (with some textured boxes) and two outdoor scenes (a small creek with a pipe and a bicycle stand). The maps are included in the ROS bags, as they are just depth data from an Intel R200 structured light sensor. Ground truth poses are provided by a motion capture system (in the case of indoor scenes) or by SVO (in the case of outdoor scenes).

Trajectories