Vision based Navigation for Micro Aerial Vehicles (MAVs)

We design smart computer-vision algorithms that allow small flying robots to fly by themselves, without any user intervention. Our flying robots use only onboard cameras and inertial sensors to "see" the world and "orient" themselves. No GPS, laser, external positioning system (e.g., Vicon), or CGI is used in these videos. Our driving motivations are search-and-rescue and remote-inspection scenarios. For example, quadrotors have great potential to enter and rapidly explore damaged buildings in search of survivors after a catastrophe. Alternatively, they can be used in all remote-inspection operations that fall beyond the operator's line of sight. For all these operations, it is crucial that they can navigate by themselves.

Bootstrapping Reinforcement Learning with Imitation for Vision-Based Agile Flight

We combine the effectiveness of Reinforcement Learning (RL) and the efficiency of Imitation Learning (IL) in the context of vision-based, autonomous drone racing. We focus on directly processing visual input without explicit state estimation. While RL offers a general framework for learning complex controllers through trial and error, it faces challenges regarding sample efficiency and computational demands due to the high dimensionality of visual inputs. Conversely, IL demonstrates efficiency in learning from visual demonstrations but is limited by the quality of those demonstrations and faces issues like covariate shift. To overcome these limitations, we propose a novel training framework that combines the advantages of RL and IL. Our framework involves three stages: (i) initial training of a teacher policy using privileged state information, (ii) distilling this policy into a student policy using IL, and (iii) performance-constrained adaptive RL fine-tuning. Our experiments in both simulated and real-world environments demonstrate that our approach achieves better performance and robustness than IL or RL alone in navigating a quadrotor through a racing course using only visual information without explicit state estimation.

Demonstrating Agile Flight from Pixels without State Estimation

We present the first vision-based quadrotor system that autonomously navigates through a sequence of gates at high speeds while directly mapping pixels to control commands. Like professional drone-racing pilots, our system does not use explicit state estimation and leverages the same control commands humans use (collective thrust and body rates). We demonstrate agile flight at speeds up to 40 km/h with accelerations up to 2g. This is achieved by training vision-based policies with reinforcement learning (RL). The training is facilitated using an asymmetric actor-critic with access to privileged information. To overcome the computational complexity during image-based RL training, we use the inner edges of the gates as a sensor abstraction. Our approach enables autonomous agile flight with standard, off-the-shelf hardware.

Contrastive Learning for Enhancing Robust Scene Transfer in Vision-based Agile Flight

Scene transfer for vision-based mobile robotics applications is a highly relevant and challenging problem. The utility of a robot greatly depends on its ability to perform a task in the real world, outside of a well-controlled lab environment. Existing end-to-end policy learning approaches for scene transfer often suffer from poor sample efficiency or limited generalization capabilities, making them unsuitable for mobile robotics applications. This work proposes an adaptive multi-pair contrastive learning strategy for visual representation learning that enables zero-shot scene transfer and real-world deployment. Control policies relying on the embedding are able to operate in unseen environments without the need for fine-tuning in the deployment environment. We demonstrate the performance of our approach on the task of agile, vision-based quadrotor flight. Extensive simulation and real-world experiments demonstrate that our approach successfully generalizes beyond the training domain and outperforms all baselines.
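
To make the contrastive-learning idea concrete, here is a minimal sketch of the standard InfoNCE loss for a single anchor embedding. This is not the adaptive multi-pair strategy described above, whose details are not given here; the function names, the temperature value, and the toy 2-D embeddings are assumptions for illustration only.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    # Assumes a non-zero vector.
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """Standard InfoNCE loss: one anchor, one positive, several negatives."""
    a = normalize(anchor)
    # Cosine similarities scaled by the temperature; the positive comes first.
    logits = [dot(a, normalize(positive)) / temperature]
    logits += [dot(a, normalize(n)) / temperature for n in negatives]
    # Numerically stable log-sum-exp over all candidates.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    # Negative log-probability of picking the positive among all candidates.
    return log_sum_exp - logits[0]


# Toy example: the positive could be the same scene rendered with a different
# appearance, the negatives embeddings of other scenes (hypothetical values).
loss_aligned = info_nce_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
loss_misaligned = info_nce_loss([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0], [-1.0, 0.0]])
```

The loss is small when the anchor is closest to its positive and large when a negative is closer, which is the pressure that pulls embeddings of the same content together across visual domains.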

AERIAL-CORE: AI-Powered Aerial Robots for Inspection and Maintenance of Electrical Power Infrastructures

Large-scale infrastructures are prone to deterioration due to age, environmental influences, and heavy usage. Ensuring their safety through regular inspections and maintenance is crucial to prevent incidents that can significantly affect public safety and the environment. This is especially pertinent in the context of electrical power networks, which, while essential for energy provision, can also be sources of forest fires. Intelligent drones have the potential to revolutionize inspection and maintenance, eliminating the risks for human operators, increasing productivity, reducing inspection time, and improving data collection quality. However, most of the current methods and technologies in aerial robotics have been trialed primarily in indoor testbeds or outdoor settings under strictly controlled conditions, always within the line of sight of human operators. Additionally, these methods and technologies have typically been evaluated in isolation, lacking comprehensive integration. This paper introduces the first autonomous system that combines various innovative aerial robots. This system is designed for extended-range inspections beyond the visual line of sight, features aerial manipulators for maintenance tasks, and includes support mechanisms for human operators working at elevated heights. The paper further discusses the successful validation of this system on numerous electrical power lines, with aerial robots executing flights over 10 kilometers away from their ground control stations.

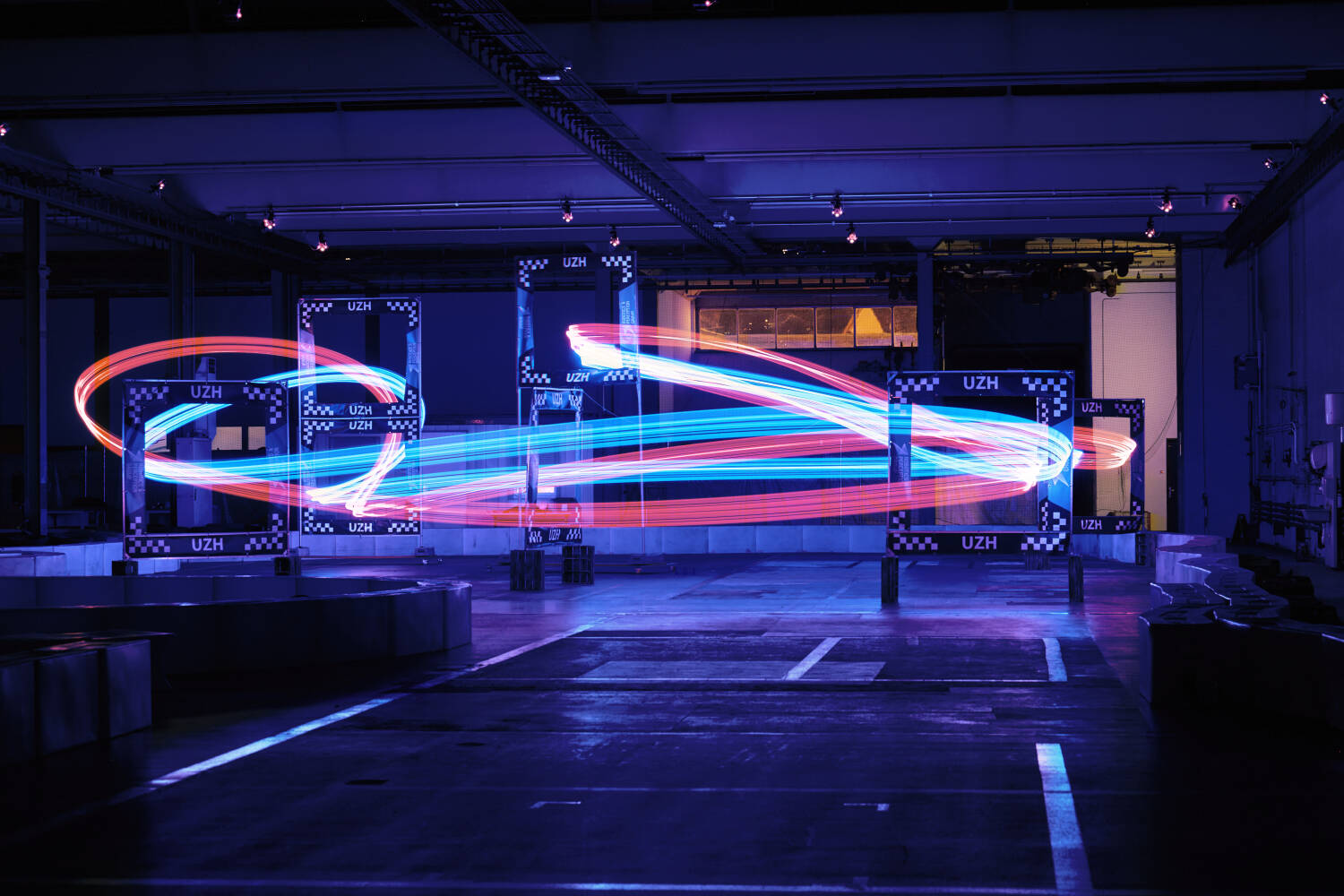

Champion-level Drone Racing using Deep Reinforcement Learning

First-person view (FPV) drone racing is a televised sport in which professional competitors pilot high-speed aircraft through a three-dimensional circuit. Each pilot sees the environment from their drone's perspective via video streamed from an onboard camera. Reaching the level of professional pilots with an autonomous drone is challenging since the robot needs to fly at its physical limits while estimating its speed and location in the circuit exclusively from onboard sensors. Here we introduce Swift, an autonomous system that can race physical vehicles at the level of the human world champions. The system combines deep reinforcement learning in simulation with data collected in the physical world. Swift competed against three human champions, including the world champions of two international leagues, in real-world head-to-head races. Swift won multiple races against each of the human champions and demonstrated the fastest recorded race time. This work represents a milestone for mobile robotics and machine intelligence, which may inspire the deployment of hybrid learning-based solutions in other physical systems.

References

Champion-level Drone Racing using Deep Reinforcement Learning

Nature, 2023

Autonomous Power Line Inspection with Drones via Perception-Aware MPC

Drones have the potential to revolutionize power line inspection by increasing productivity, reducing inspection time, improving data quality, and eliminating the risks for human operators. Current state-of-the-art systems for power line inspection have two shortcomings: (i) control is decoupled from perception and needs accurate information about the location of the power lines and masts; (ii) collision avoidance is decoupled from the power line tracking, which results in poor tracking in the vicinity of the power masts, and, consequently, in decreased data quality for visual inspection. In this work, we propose a model predictive controller (MPC) that overcomes these limitations by tightly coupling perception and action. Our controller generates commands that maximize the visibility of the power lines while, at the same time, safely avoiding the power masts. For power line detection, we propose a lightweight learning-based detector that is trained only on synthetic data and is able to transfer zero-shot to real-world power line images. We validate our system in simulation and real-world experiments on a mock-up power line infrastructure.

Learning Deep Sensorimotor Policies for Vision-based Autonomous Drone Racing

Autonomous drones can operate in remote and unstructured environments, enabling various real-world applications. However, the lack of effective vision-based algorithms has been a stumbling block to achieving this goal. Existing systems often require hand-engineered components for state estimation, planning, and control. Such a sequential design involves laborious tuning, human heuristics, and compounding delays and errors. This paper tackles the vision-based autonomous-drone racing problem by learning deep sensorimotor policies. We use contrastive learning to extract robust feature representations from the input images and leverage a two-stage learning-by-cheating framework for training a neural network policy. The resulting policy directly infers control commands with feature representations learned from raw images, forgoing the need for globally-consistent state estimation, trajectory planning, and handcrafted control design. Our experimental results indicate that our vision-based policy can achieve the same level of racing performance as the state-based policy while being robust against different visual disturbances and distractors. This work serves as a stepping-stone toward developing intelligent vision-based autonomous systems that control the drone purely from image inputs, like human pilots.

HDVIO: Improving Localization and Disturbance Estimation with Hybrid Dynamics VIO

Visual-inertial odometry (VIO) is the most common approach for estimating the state of autonomous micro aerial vehicles using only onboard sensors. Existing methods improve VIO performance by including a dynamics model in the estimation pipeline. However, such methods degrade in the presence of low-fidelity vehicle models and continuous external disturbances, such as wind. Our proposed method, HDVIO, overcomes these limitations by using a hybrid dynamics model that combines a point-mass vehicle model with a learning-based component that captures complex aerodynamic effects. HDVIO estimates the external force and the full robot state by leveraging the discrepancy between the actual motion and the predicted motion of the hybrid dynamics model. Our hybrid dynamics model uses a history of thrust and IMU measurements to predict the vehicle dynamics. To demonstrate the performance of our method, we present results on both public and novel drone dynamics datasets and show real-world experiments of a quadrotor flying in strong winds up to 25 km/h. The results show that our approach improves the motion and external force estimation compared to the state-of-the-art by up to 33% and 40%, respectively. Furthermore, differently from existing methods, we show that it is possible to predict the vehicle dynamics accurately while having no explicit knowledge of its full state.

Agilicious: Open-Source and Open-Hardware Agile Quadrotor for Vision-Based Flight

We are excited to present Agilicious, a co-designed hardware and software framework tailored to autonomous, agile quadrotor flight. It is completely open-source and open-hardware and supports both model-based and neural-network-based controllers. Also, it provides high thrust-to-weight and torque-to-inertia ratios for agility, onboard vision sensors, GPU-accelerated compute hardware for real-time perception and neural-network inference, a real-time flight controller, and a versatile software stack. In contrast to existing frameworks, Agilicious offers a unique combination of flexible software stack and high-performance hardware. We compare Agilicious with prior works and demonstrate it on different agile tasks, using both model-based and neural-network-based controllers. Our demonstrators include trajectory tracking at up to 5 g and 70 km/h in a motion-capture system, and vision-based acrobatic flight and obstacle avoidance in both structured and unstructured environments using solely onboard perception. Finally, we demonstrate its use for hardware-in-the-loop simulation in virtual-reality environments. Thanks to its versatility, we believe that Agilicious supports the next generation of scientific and industrial quadrotor research. For more details, see our paper, video, and webpage.

Learning Perception-Aware Agile Flight in Cluttered Environments

Recently, neural control policies have outperformed existing model-based planning-and-control methods for autonomously navigating quadrotors through cluttered environments in minimum time. However, they are not perception aware, a crucial requirement in vision-based navigation due to the camera's limited field of view and the underactuated nature of a quadrotor. We propose a method to learn neural network policies that achieve perception-aware, minimum-time flight in cluttered environments. Our method combines imitation learning and reinforcement learning (RL) by leveraging a privileged learning-by-cheating framework. Using RL, we first train a perception-aware teacher policy with full-state information to fly in minimum time through cluttered environments. Then, we use imitation learning to distill its knowledge into a vision-based student policy that only perceives the environment via a camera. Our approach tightly couples perception and control, showing a significant advantage in computation speed (10x faster) and success rate. We demonstrate the closed-loop control performance using a physical quadrotor and hardware-in-the-loop simulation at speeds up to 50 km/h.

Visual Attention Prediction Improves Performance of Autonomous Drone Racing Agents

Humans race drones faster than neural networks trained for end-to-end autonomous flight. This may be related to the ability of human pilots to select task-relevant visual information effectively. This work investigates whether neural networks capable of imitating human eye gaze behavior and attention can improve neural network performance for the challenging task of vision-based autonomous drone racing. We hypothesize that gaze-based attention prediction can be an efficient mechanism for visual information selection and decision making in a simulator-based drone racing task. We test this hypothesis using eye gaze and flight trajectory data from 18 human drone pilots to train a visual attention prediction model. We then use this visual attention prediction model to train an end-to-end controller for vision-based autonomous drone racing using imitation learning. We compare the drone racing performance of the attention-prediction controller to those using raw image inputs and image-based abstractions (i.e., feature tracks). Comparing success rates for completing a challenging race track by autonomous flight, our results show that the attention-prediction based controller (88% success rate) outperforms the RGB-image (61% success rate) and feature-tracks (55% success rate) controller baselines. Furthermore, visual attention-prediction and feature-track based models showed better generalization performance than image-based models when evaluated on hold-out reference trajectories. Our results demonstrate that human visual attention prediction improves the performance of autonomous vision-based drone racing agents and provides an essential step towards vision-based, fast, and agile autonomous flight that eventually can reach and even exceed human performances.

A Benchmark Comparison of Learned Control Policies for Agile Quadrotor Flight

Quadrotors are highly nonlinear dynamical systems that require carefully tuned controllers to be pushed to their physical limits. Recently, learning-based control policies have been proposed for quadrotors, as they would potentially allow learning direct mappings from high-dimensional raw sensory observations to actions. Due to sample inefficiency, training such learned controllers on the real platform is impractical or even impossible. Training in simulation is attractive but requires transferring policies between domains, which demands that trained policies be robust to the domain gap. In this work, we make two contributions: (i) we perform the first benchmark comparison of existing learned control policies for agile quadrotor flight and show that training a control policy that commands body-rates and thrust results in more robust sim-to-real transfer compared to a policy that directly specifies individual rotor thrusts, (ii) we demonstrate for the first time that such a control policy trained via deep reinforcement learning can control a quadrotor in real-world experiments at speeds over 45 km/h.

Learning High-Speed Flight in the Wild

Quadrotors are agile. Unlike most other machines, they can traverse extremely complex environments at high speeds. To date, only expert human pilots have been able to fully exploit their capabilities. Autonomous operation with onboard sensing and computation has been limited to low speeds. State-of-the-art methods generally separate the navigation problem into subtasks: sensing, mapping, and planning. While this approach has proven successful at low speeds, the separation it builds upon can be problematic for high-speed navigation in cluttered environments. Indeed, the subtasks are executed sequentially, leading to increased processing latency and a compounding of errors through the pipeline. Here we propose an end-to-end approach that can autonomously fly quadrotors through complex natural and man-made environments at high speeds, with purely onboard sensing and computation. The key principle is to directly map noisy sensory observations to collision-free trajectories in a receding-horizon fashion. This direct mapping drastically reduces processing latency and increases robustness to noisy and incomplete perception. The sensorimotor mapping is performed by a convolutional network that is trained exclusively in simulation via privileged learning: imitating an expert with access to privileged information. By simulating realistic sensor noise, our approach achieves zero-shot transfer from simulation to challenging real-world environments that were never experienced during training: dense forests, snow-covered terrain, derailed trains, and collapsed buildings. Our work demonstrates that end-to-end policies trained in simulation enable high-speed autonomous flight through challenging environments, outperforming traditional obstacle avoidance pipelines. We release the code open source.

References

Learning High-Speed Flight in the Wild

Science Robotics, 2021.

Human-Piloted Drone Racing: Visual Processing and Control

Humans race drones faster than algorithms, despite being limited to a fixed camera angle, body rate control, and response latencies on the order of hundreds of milliseconds. A better understanding of the ability of human pilots to select appropriate motor commands from highly dynamic visual information may provide key insights for solving current challenges in vision-based autonomous navigation. This paper investigates the relationship between human eye movements, control behavior, and flight performance in a drone racing task. We collected a multimodal dataset from 21 experienced drone pilots using a highly realistic drone racing simulator, also used to recruit professional pilots. Our results show task-specific improvements in drone racing performance over time. In particular, we found that eye gaze tracks future waypoints (i.e., gates), with first fixations occurring on average 1.5 seconds and 16 meters before reaching the gate. Moreover, human pilots consistently looked at the inside of the future flight path for lateral (i.e., left and right turns) and vertical maneuvers (i.e., ascending and descending). Finally, we found a strong correlation between pilots' eye movements and the commanded direction of quadrotor flight, with an average visual-motor response latency of 220 ms. These results highlight the importance of coordinated eye movements in human-piloted drone racing. We make our dataset publicly available.

Data-Driven MPC for Quadrotors

Aerodynamic forces render accurate high-speed trajectory tracking with quadrotors extremely challenging. These complex aerodynamic effects become a significant disturbance at high speeds, introducing large positional tracking errors, and are extremely difficult to model. To fly at high speeds, feedback control must be able to account for these aerodynamic effects in real-time. This necessitates a modelling procedure that is both accurate and efficient to evaluate. Therefore, we present an approach to model aerodynamic effects using Gaussian Processes, which we incorporate into a Model Predictive Controller to achieve efficient and precise real-time feedback control, leading to up to 70% reduction in trajectory tracking error at high speeds. We verify our method by extensive comparison to a state-of-the-art linear drag model in synthetic and real-world experiments at speeds of up to 14 m/s and accelerations beyond 4g.
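
As a rough illustration of modelling an aerodynamic residual with a Gaussian Process, the sketch below fits a one-dimensional GP (RBF kernel, posterior mean only) that maps speed to a residual acceleration. The choice of input and output, the kernel hyperparameters, the quadratic drag-like training data, and all function names are assumptions made for this example, not the model used in the work above.

```python
import math


def rbf(a, b, length_scale=2.0, variance=1.0):
    """Squared-exponential kernel between two scalar inputs."""
    return variance * math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def gp_fit(xs, ys, noise=1e-6):
    """Return weights alpha = (K + noise * I)^-1 y for the training data."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    return solve(K, ys)


def gp_predict(x_query, xs, alpha):
    """GP posterior mean at x_query."""
    return sum(rbf(x_query, x) * a for x, a in zip(xs, alpha))


# Hypothetical training data: residual deceleration growing with speed squared.
speeds = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0]      # m/s
residuals = [-0.05 * v * abs(v) for v in speeds]           # m/s^2
alpha = gp_fit(speeds, residuals)
```

In a controller, a prediction such as `gp_predict(9.0, speeds, alpha)` would be added to the nominal dynamics model as a correction term; how that correction enters the MPC is beyond this sketch.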

Flightmare: A Flexible Quadrotor Simulator

Currently available quadrotor simulators have a rigid and highly specialized structure: they are either very fast, physically accurate, or photo-realistic. In this work, we propose a paradigm shift in the development of simulators: moving the trade-off between accuracy and speed from the developers to the end-users. We release a new modular quadrotor simulator: Flightmare. Flightmare is composed of two main components: a configurable rendering engine built on Unity and a flexible physics engine for dynamics simulation. These two components are fully decoupled and can run independently of each other. Flightmare comes with several desirable features: (i) a large multi-modal sensor suite, including an interface to extract the 3D point-cloud of the scene; (ii) an API for reinforcement learning which can simulate hundreds of quadrotors in parallel; and (iii) an integration with a virtual-reality headset for interaction with the simulated environment. Flightmare can be used for various applications, including path-planning, reinforcement learning, visual-inertial odometry, deep learning, and human-robot interaction.

References

Flightmare: A Flexible Quadrotor Simulator

Conference on Robot Learning (CoRL), 2020

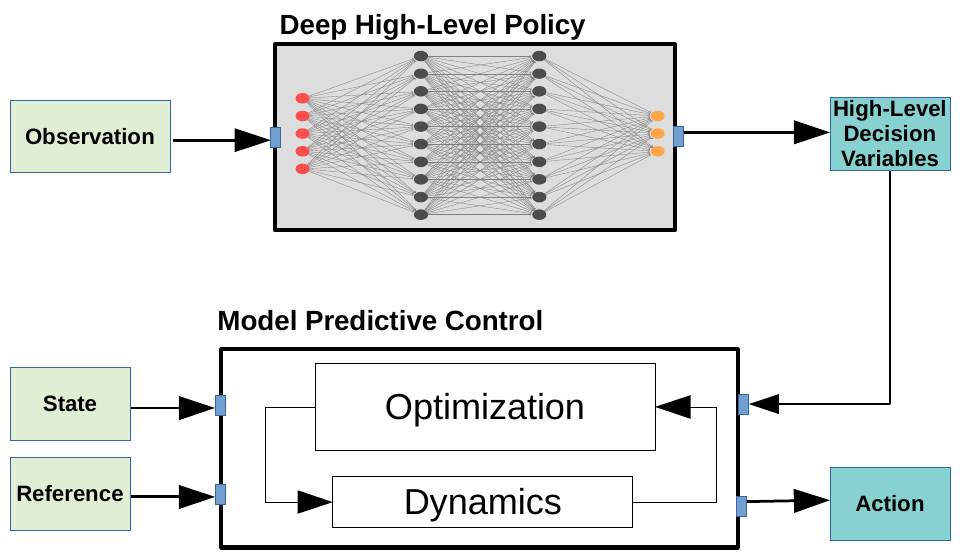

Learning High-Level Policies for Model Predictive Control

The combination of policy search and deep neural networks holds the promise of automating a variety of decision-making tasks. Model Predictive Control (MPC) provides robust solutions to robot control tasks by making use of a dynamical model of the system and solving an optimization problem online over a short planning horizon. In this work, we combine probabilistic decision-making approaches and the generalization capability of artificial neural networks with powerful online optimization by learning a deep high-level policy for the MPC (High-MPC). Conditioned on the robot's local observations, the trained neural network policy is capable of adaptively selecting high-level decision variables for the low-level MPC controller, which then generates optimal control commands for the robot. First, we formulate the search of high-level decision variables for MPC as a policy search problem, specifically, a probabilistic inference problem. The problem can be solved in closed form. Second, we propose a self-supervised learning algorithm for learning a neural network high-level policy, which is useful for online hyperparameter adaptations in highly dynamic environments. We demonstrate the importance of incorporating online adaptation into autonomous robots by using the proposed method to solve a challenging control problem, where the task is to control a simulated quadrotor to fly through a swinging gate. We show that our approach can handle situations that are difficult for standard MPC.

Deep Drone Acrobatics

Performing acrobatic maneuvers with quadrotors is extremely challenging. Acrobatic flight requires high thrust and extreme angular accelerations that push the platform to its physical limits. Professional drone pilots often measure their level of mastery by flying such maneuvers in competitions. In this paper, we propose to learn a sensorimotor policy that enables an autonomous quadrotor to fly extreme acrobatic maneuvers with only onboard sensing and computation. We train the policy entirely in simulation by leveraging demonstrations from an optimal controller that has access to privileged information. We use appropriate abstractions of the visual input to enable transfer to a real quadrotor. We show that the resulting policy can be directly deployed in the physical world without any fine-tuning on real data. Our methodology has several favorable properties: it does not require a human expert to provide demonstrations, it cannot harm the physical system during training, and it can be used to learn maneuvers that are challenging even for the best human pilots. Our approach enables a physical quadrotor to fly maneuvers such as the Power Loop, the Barrel Roll, and the Matty Flip, during which it incurs accelerations of up to 3g.

References

Deep Drone Acrobatics

Robotics: Science and Systems (RSS), 2020.

AlphaPilot: Autonomous Drone Racing

We present a novel system for autonomous, vision-based drone racing combining learned data abstraction, nonlinear filtering, and time-optimal trajectory planning. The system has successfully been deployed at the first autonomous drone racing world championship: the 2019 AlphaPilot Challenge. Contrary to traditional drone racing systems, which only detect the next gate, our approach makes use of any visible gate and takes advantage of multiple, simultaneous gate detections to compensate for drift in the state estimate and build a global map of the gates. The global map and drift-compensated state estimate allow the drone to navigate through the race course even when the gates are not immediately visible and further enable planning a near time-optimal path through the race course in real time based on approximate drone dynamics. The proposed system has been demonstrated to successfully guide the drone through tight race courses reaching speeds up to 8 m/s and ranked second at the 2019 AlphaPilot Challenge.

References

AlphaPilot: Autonomous Drone Racing

Robotics: Science and Systems (RSS), 2020

Best Systems Paper Award!

Towards Low-Latency High-Bandwidth Control of Quadrotors using Event Cameras

Event cameras are a promising candidate to enable high speed vision-based control due to their low sensor latency and high temporal resolution. However, purely event-based feedback has yet to be used in the control of drones. In this work, a first step towards implementing low-latency high-bandwidth control of quadrotors using event cameras is taken. In particular, this paper addresses the problem of one-dimensional attitude tracking using a dualcopter platform equipped with an event camera. The event-based state estimation consists of a modified Hough transform algorithm combined with a Kalman filter that outputs the roll angle and angular velocity of the dualcopter relative to a horizon marked by a black-and-white disk. The estimated state is processed by a proportional-derivative attitude control law that computes the rotor thrusts required to track the desired attitude. The proposed attitude tracking scheme shows promising results of event-camera-driven closed loop control: the state estimator performs with an update rate of 1 kHz and a latency determined to be 12 ms, enabling attitude tracking at speeds of over 1600 degrees per second.
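
The proportional-derivative step of such a pipeline can be sketched as follows. This is a simplified single-axis model, not the authors' implementation: the gains, inertia, arm length, total thrust, the sign convention for torque, and the function names are all hypothetical values chosen for illustration. The 1 kHz loop rate matches the estimator update rate quoted above.

```python
def pd_rotor_thrusts(roll, roll_rate, roll_ref, total_thrust, arm_length, kp, kd):
    """Map a PD roll command to (left, right) rotor thrusts in newtons."""
    torque = kp * (roll_ref - roll) - kd * roll_rate
    # Assumed convention: torque = arm_length * (left - right).
    delta = torque / (2.0 * arm_length)
    # Rotors cannot produce negative thrust, so clamp at zero.
    left = max(0.0, total_thrust / 2.0 + delta)
    right = max(0.0, total_thrust / 2.0 - delta)
    return left, right


def simulate_roll(roll_ref, duration=3.0, dt=0.001, inertia=0.01,
                  total_thrust=10.0, arm_length=0.2, kp=0.5, kd=0.15):
    """Integrate single-axis roll dynamics under the PD law; return final roll."""
    roll, roll_rate = 0.0, 0.0
    for _ in range(int(duration / dt)):
        left, right = pd_rotor_thrusts(roll, roll_rate, roll_ref,
                                       total_thrust, arm_length, kp, kd)
        # Torque actually applied by the (possibly clamped) rotor thrusts.
        torque = arm_length * (left - right)
        roll_rate += torque / inertia * dt
        roll += roll_rate * dt
    return roll


final_roll = simulate_roll(roll_ref=0.5)  # step from 0 rad to 0.5 rad
```

In the real system the `roll` and `roll_rate` inputs would come from the event-based estimator (Hough transform plus Kalman filter) rather than from a simulated state.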

References

Towards Low-Latency High-Bandwidth Control of Quadrotors using Event Cameras

IEEE International Conference on Robotics and Automation (ICRA), 2020

Dynamic Obstacle Avoidance for Quadrotors with Event Cameras

Today's autonomous drones have reaction times of tens of milliseconds, which is not enough for navigating fast in complex dynamic environments. To safely avoid fast moving objects, drones need low-latency sensors and algorithms. We departed from state-of-the-art approaches by using event cameras, which are bioinspired sensors with reaction times of microseconds. Our approach exploits the temporal information contained in the event stream to distinguish between static and dynamic objects and leverages a fast strategy to generate the motor commands necessary to avoid the approaching obstacles. Standard vision algorithms cannot be applied to event cameras because the output of these sensors is not images but a stream of asynchronous events that encode per-pixel intensity changes. Our resulting algorithm has an overall latency of only 3.5 milliseconds, which is sufficient for reliable detection and avoidance of fast-moving obstacles. We demonstrate the effectiveness of our approach on an autonomous quadrotor using only onboard sensing and computation. Our drone was capable of avoiding multiple obstacles of different sizes and shapes, at relative speeds up to 10 meters/second, both indoors and outdoors.
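
To see why latency matters at these speeds, the short calculation below converts a perception-to-command latency into the distance an obstacle closes before the drone can react. The 3.5 ms and 10 m/s figures come from the text above; the 30 ms value is only an illustrative stand-in for "tens of milliseconds", and the function name is hypothetical.

```python
def distance_during_latency(relative_speed_mps, latency_s):
    """Distance (m) closed between obstacle and drone while the system reacts."""
    return relative_speed_mps * latency_s


event_based = distance_during_latency(10.0, 3.5e-3)   # 0.035 m
frame_based = distance_during_latency(10.0, 30e-3)    # 0.3 m (illustrative latency)
```

Under these assumptions, the obstacle travels a few centimeters during the event-based pipeline's latency, compared with tens of centimeters for a pipeline with a 30 ms reaction time.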

References

Dynamic Obstacle Avoidance for Quadrotors with Event Cameras

Science Robotics, March 18, 2020.

Deep Drone Racing: Learning Agile Flight in Dynamic Environments

Dynamically changing environments, unreliable state estimation, and operation under severe resource constraints are fundamental challenges for robotics, which still limit the deployment of small autonomous drones. We address these challenges in the context of autonomous, vision-based drone racing in dynamic environments. A racing drone must traverse a track with possibly moving gates at high speed. We enable this functionality by combining the performance of a state-of-the-art path-planning and control system with the perceptual awareness of a convolutional neural network (CNN). The CNN directly maps raw images to a desired waypoint and speed. Given the CNN output, the planner generates a short minimum-jerk trajectory segment that is tracked by a model-based controller to actuate the drone towards the waypoint. The resulting modular system has several desirable features: (i) it can run fully on-board, (ii) it does not require globally consistent state estimation, and (iii) it is both platform and domain independent. We extensively test the precision and robustness of our system, both in simulation and on a physical platform. In both domains, our method significantly outperforms the prior state of the art. In order to understand the limits of our approach, we additionally compare against professional human drone pilots with different skill levels.
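
For intuition about the minimum-jerk segment, the sketch below evaluates the well-known closed-form rest-to-rest minimum-jerk polynomial along one axis. It is a simplification: the planner described above starts from the drone's current state, which generally has non-zero velocity and acceleration, whereas this example assumes zero velocity and acceleration at both ends. The function name and the numbers are hypothetical.

```python
def min_jerk_state(p0, p1, duration, t):
    """Position, velocity, and acceleration at time t on a rest-to-rest
    minimum-jerk segment from p0 to p1 lasting `duration` seconds."""
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time in [0, 1]
    d = p1 - p0
    pos = p0 + d * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
    vel = d / duration * (30 * tau**2 - 60 * tau**3 + 30 * tau**4)
    acc = d / duration**2 * (60 * tau - 180 * tau**2 + 120 * tau**3)
    return pos, vel, acc


# Example: move 4 m along one axis in 2 s and sample the midpoint.
pos_mid, vel_mid, acc_mid = min_jerk_state(0.0, 4.0, 2.0, 1.0)
```

Applying the same polynomial independently to x, y, and z yields a 3D segment toward the waypoint; the segment duration could be derived from the speed predicted by the network, although that mapping is not specified here.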

References

Deep Drone Racing: From Simulation to Reality with Domain Randomization

IEEE Transactions on Robotics, 2019

Deep Drone Racing: Learning Agile Flight in Dynamic Environments

Conference on Robot Learning (CoRL), Zurich, 2018.

Best Systems Paper Award!

Oral Presentation. Oral Acceptance Rate: 4.0%.

Beauty and the Beast: Optimal Methods Meet Learning for Drone Racing.

This approach was used to win the IROS 2018 Autonomous Drone Race.

IEEE International Conference on Robotics and Automation (ICRA), 2019.

Are We Ready for Autonomous Drone Racing? The UZH-FPV Drone Racing Dataset

IEEE International Conference on Robotics and Automation (ICRA), 2019.

The Foldable Drone: A Morphing Quadrotor that can Squeeze and Fly

The recent advances in state estimation, perception, and navigation algorithms have significantly contributed to the ubiquitous use of quadrotors for inspection, mapping, and aerial imaging. To further increase the versatility of quadrotors, recent works investigated the use of an adaptive morphology, which consists of modifying the shape of the vehicle during flight to suit a specific task or environment. However, these works either increase the complexity of the platform or decrease its controllability. In this paper, we propose a novel, simpler, yet effective morphing design for quadrotors consisting of a frame with four independently rotating arms that fold around the main frame. To guarantee stable flight at all times, we exploit an optimal control strategy that adapts on the fly to the drone morphology. We demonstrate the versatility of the proposed adaptive morphology in different tasks, such as negotiation of narrow gaps, close inspection of vertical surfaces, and object grasping and transportation. The experiments are performed on an actual, fully autonomous quadrotor relying solely on onboard visual-inertial sensors and compute. No external motion tracking systems and computers are used. This is the first work showing stable flight without requiring any symmetry of the morphology.

References

The Foldable Drone: A Morphing Quadrotor that can Squeeze and Fly

IEEE Robotics and Automation Letters (RA-L), 2018.

Perception Aware Navigation

References

PAMPC: Perception-Aware Model Predictive Control for Quadrotors

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, 2018.

Perception-aware Receding Horizon Navigation for MAVs

IEEE International Conference on Robotics and Automation (ICRA), 2018.

Appearance-based Active, Monocular, Dense Reconstruction for Micro Aerial Vehicles

Robotics: Science and Systems, Berkeley, 2014.

DroNet: Learning to Fly by Driving

References

DroNet: Learning to Fly by Driving

IEEE Robotics and Automation Letters (RA-L), 2018.

PDF YouTube Software and Datasets

Flying in Low Light thanks to Event Cameras

In this paper, we present the first state estimation pipeline that leverages the complementary advantages of a standard camera and an event camera by fusing events, standard frames, and inertial measurements in a tightly coupled manner. We show on the Event Camera Dataset that our hybrid pipeline leads to an accuracy improvement of 130% over event-only pipelines, and 85% over standard-frames-only visual-inertial systems, while still being computationally tractable.

Furthermore, we use our pipeline to demonstrate, to the best of our knowledge, the first autonomous quadrotor flight using an event camera for state estimation, unlocking flight scenarios that were not reachable with traditional visual-inertial odometry, such as low-light environments and high-dynamic-range scenes.

References

Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High Speed Scenarios

IEEE Robotics and Automation Letters (RA-L), 2018.

Vision-based Autonomous Quadrotor Landing on a Moving Platform

References

Exploration with Multi-Rotors

References

Autonomous, Vision-based Flight and Live Dense 3D Mapping with Quadrotors

In the video on the right, we demonstrate several capabilities of our vision-controlled quadrotors, such as autonomous waypoint navigation, live dense 3D reconstruction, and aerial-guided collaborative grasping with a ground robot. Our visual-odometry pipeline (SVO) runs in real time onboard the quadrotor. The MAV navigates autonomously along predefined waypoints. No GPS, teleoperation, or motion-capture system was used at any point during the flight. The live, dense 3D reconstruction is computed by REMODE, our REgularized MOnocular DEnse reconstruction algorithm.

References

Autonomous, Vision-based Flight and Live Dense 3D Mapping with a Quadrotor Micro Aerial Vehicle

Journal of Field Robotics, 2016.

SVO: Fast Semi-Direct Monocular Visual Odometry

IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

Continuous On-Board Monocular-Vision-based Elevation Mapping Applied to Autonomous Landing of Micro Aerial Vehicles

References

MAV Urban Localization from Google Street View Data

References

Air-ground Matching: Appearance-based GPS-denied Urban Localization of Micro Aerial Vehicles

Journal of Field Robotics, 2015.

Micro Air Vehicle Localization and Position Tracking from Textured 3D Cadastral Models

IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

MAV Urban Localization from Google Street View Data

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2013.

SFly: Swarm of Micro Flying Robots (EU Project: 2009-2012)

References

Vision-Controlled Micro Flying Robots: from System Design to Autonomous Navigation and Mapping in GPS-denied Environments

IEEE Robotics and Automation Magazine, Vol. 21, Issue 3, 2014.